Inflation’s Effect on SaaS & ITO Operating Models – An Update

Who today has experienced a long-term economic inflationary period?

Inflation is very much in the U.S. news as it reaches 40-year highs. This means a person has to be near the end of their professional career to have experienced the previous inflationary period. One of the authors dimly recalls his economics professors trying to parse what, at the time, was called stagflation, which hit the United States in the 1970s. Oil price shocks drove up prices while unemployment remained high. Inflation had previously been explained as too many dollars chasing too few goods and was generally attributed to economies overheating because of very low unemployment rates.

Today economists are assessing economic fundamentals to predict whether this inflationary spike will be temporary or persistent. Factors suggesting a short-term spike center on the well-publicized supply chain disruptions, coupled with record savings levels built up during the pandemic, when discretionary spending on things like travel and restaurant meals was greatly curtailed and retail spending shifted from in-store shopping to e-commerce.

On the other hand, some economists point to persistent government deficits from pumping money into the economy. Given various regulatory and economic uncertainties, that money has been sitting on the sidelines. Furthermore, stock market run-ups in valuation have been attributed to investor money seeking higher returns than can be achieved through traditional savings and bond ownership, because of low interest rates on these conservative investment instruments.

Partisans will selectively cite these factors to explain away or criticize the current economic climate. Businesses, meanwhile, face a long-dormant financial risk rearing its ugly head, one that can dramatically impact long-term financial forecasting.

So in which technology company business models will inflation have a near-term impact?

Transaction-based businesses in the IT industry will be able to follow traditional methods of passing costs on to the customer. But for business units operating under Anything as a Service (XaaS) subscription models, ITO contracts and infrastructure managed service agreements, the near-term impact could be more acute.

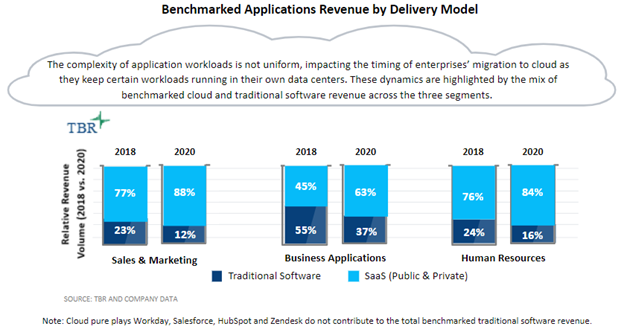

Cloud-enabled SaaS models are a relatively new phenomenon as Industry 4.0 gains momentum. Proponents of these business models also assert that legacy business metrics and analysis do not apply, given that the majority of selling expenses are recognized in the first fiscal quarter of a multiyear agreement while the revenue is recognized ratably over the contract term. As such, the financial spokespeople for these business models lean heavily on relatively new metrics, such as annual recurring revenue (ARR), net dollar revenue retention and lifetime customer value, that chart a forecast course for when operating profits will materialize.
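To make the ratable-recognition point concrete, here is a minimal Python sketch, assuming a single hypothetical contract whose selling expense is booked entirely in its first quarter (the framing used above) while revenue is spread evenly across the term. The `Contract` fields and all dollar figures are illustrative assumptions, not TBR data; the function reports the first quarter in which cumulative operating profit stops being negative.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Contract:
    # All figures are hypothetical inputs for illustration.
    annual_value: float             # contract value per year (its ARR contribution)
    term_quarters: int              # contract length in quarters
    upfront_selling_cost: float     # selling expense recognized in the first quarter
    quarterly_delivery_cost: float  # cost to serve the customer each quarter


def cumulative_profit_by_quarter(c: Contract) -> List[float]:
    """Running operating profit when revenue is recognized ratably per quarter."""
    quarterly_revenue = c.annual_value / 4
    running_total = 0.0
    history = []
    for quarter in range(1, c.term_quarters + 1):
        cost = c.quarterly_delivery_cost
        if quarter == 1:
            cost += c.upfront_selling_cost  # selling expense lands up front
        running_total += quarterly_revenue - cost
        history.append(running_total)
    return history


def breakeven_quarter(c: Contract) -> Optional[int]:
    """First quarter in which cumulative profit is no longer negative, if any."""
    for quarter, total in enumerate(cumulative_profit_by_quarter(c), start=1):
        if total >= 0:
            return quarter
    return None


deal = Contract(annual_value=120_000, term_quarters=12,
                upfront_selling_cost=60_000, quarterly_delivery_cost=15_000)
print(breakeven_quarter(deal))  # 4
```

With these inputs the deal turns cumulatively profitable only in its fourth quarter, which illustrates why proponents lean on forward-looking metrics such as ARR rather than early-quarter profit.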

ITO contracts have had a somewhat longer evolution. They started as multiyear deals in which vendors could reap greater profits as operating costs declined through increased automation of monitoring and maintenance. These contracts then moved to shorter terms and, more recently, have stipulated cost decreases over time so that any operating cost savings created by the vendor are passed along to, or at least shared with, the customer. The ITO market has likewise seen a shift, or rebranding, of these customer offers into infrastructure managed services to pivot the contract model more in line with SaaS constructs.

When inflation was last a top-of-mind economic consideration, most IT was on premises and operated by company personnel. TBR seriously doubts that any strategic scenario planning for these newer subscription-based consumption models done before perhaps late 2020 anticipated the current level of inflation and its potential operating impact.

What is the immediate inflationary risk to XaaS and ITO business models?

SaaS models take several years to generate profit in what is variously described as the flywheel effect or the force multiplier effect. Labor and utility costs that rise beyond forecast, while revenue is tethered to long-term contracts, will add several percentage points to these models' operating costs. In this sense, the newer the SaaS operating model, the less cost-structure risk it carries, because less of its revenue is locked in through renewals. TBR expects that the more mature the SaaS model, and the greater its accrued or committed revenue, the more adverse the bottom-line operating impact.
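The arithmetic behind margin erosion on fixed-price, multiyear commitments can be sketched as follows. This is a simplified illustration, assuming the contract price stays flat for the full term while a hypothetical share of the cost base (labor and utilities, say) compounds at a single annual inflation rate; the 8% rate, the 50% exposed share and the revenue and cost figures are illustrative only.

```python
from typing import List


def margin_by_year(annual_revenue: float, base_cost: float, inflation_rate: float,
                   years: int, exposed_share: float = 1.0) -> List[float]:
    """Operating margin per contract year when the price is fixed but some costs inflate.

    exposed_share is a hypothetical fraction of the cost base (e.g., labor and
    utilities) assumed to rise with inflation; the rest is held flat.
    """
    margins = []
    for year in range(years):
        inflating = base_cost * exposed_share * (1 + inflation_rate) ** year
        flat = base_cost * (1 - exposed_share)
        margins.append((annual_revenue - inflating - flat) / annual_revenue)
    return margins


def cumulative_cost_overrun(base_cost: float, inflation_rate: float,
                            years: int, exposed_share: float = 1.0) -> float:
    """Extra cost over the committed term versus a no-inflation forecast."""
    return sum(base_cost * exposed_share * ((1 + inflation_rate) ** year - 1)
               for year in range(years))


# Hypothetical deal: fixed revenue of 100 per year, 80 of cost, half of it inflation-exposed.
for year, m in enumerate(margin_by_year(100, 80, 0.08, 3, exposed_share=0.5), start=1):
    print(f"year {year}: {m:.1%}")  # year 1: 20.0%, year 2: 16.8%, year 3: 13.3%
```

In this example margin falls by 3.2 percentage points in the second year alone. Because `cumulative_cost_overrun` sums the extra cost over every committed year, a longer committed term (or a bigger committed book, which scales `base_cost`) produces a larger shortfall, consistent with the expectation that more mature SaaS models face the more adverse impact.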

The ITO market, on the other hand, has shown persistent declines, resulting in consolidations and divestments as traditional ITO vendors seek to profitably manage eroding revenue streams and convert them into managed services agreements. Inflation's impact on costs will amplify the need to infuse these business practices with more automated capabilities or more low-cost (typically offshore) labor as offsets. Still, operating profit in this space will likely decline further unless vendors negotiate incremental price increases that customers may or may not accept, given their own cost-containment pressures.

What go-forward tactics are in the technology vendor toolbox to mitigate inflationary impact?

Inflation is not new, but the operating models prevalent now were not around when we last experienced it. Business strategists still have a blend of initiatives they can embrace to preserve their operating models and their customer relationships:

- Market education: Transparent disclosure of the cost impacts on the vendor's business, along with any proposals for sharing the burden with customers, can preserve customer loyalty.

- Customer research on existing brand perception: The XaaS Pricing team has a very good blog outlining the Van Westendorp Price Sensitivity Meter and its applicability to setting B2B SaaS pricing strategy. That research methodology can help vendors gauge where they stand with customers on value perception and give pricing strategists a line of sight into how much room their brand perception leaves for implementing price increases.

- New contract language for price increases: The long quiet period on inflation, coupled with the innate technology reality of “faster, better, cheaper,” has customers expecting price reductions for IT; offsetting those prevailing market expectations will require genuine customer education about inflation. New language will not help with the inflationary impact on existing contracts, which must be honored, but it can establish a go-forward pricing model that takes into account a business risk largely dormant for the better part of 40 years.
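The Van Westendorp Price Sensitivity Meter named in the customer research item above asks respondents four price questions (too cheap, cheap, expensive, too expensive) and looks for where the resulting curves intersect. The following is a minimal grid-based sketch of two commonly cited intersections, the optimal price point and the indifference price point; the respondent answers and the $5 price grid are hypothetical, and published variants of the method differ on which curves define each point and whether thresholds are counted inclusively.

```python
from typing import Callable, Dict, Iterable, Optional, Sequence


def share_at_or_below(answers: Sequence[float], price: float) -> float:
    """Rising curve: fraction of respondents whose threshold is <= price."""
    return sum(a <= price for a in answers) / len(answers)


def share_at_or_above(answers: Sequence[float], price: float) -> float:
    """Falling curve: fraction of respondents whose threshold is >= price."""
    return sum(a >= price for a in answers) / len(answers)


def first_crossing(falling: Callable[[float], float], rising: Callable[[float], float],
                   prices: Iterable[float]) -> Optional[float]:
    """First grid price where the rising curve meets or passes the falling curve."""
    for p in prices:
        if rising(p) >= falling(p):
            return p
    return None


def van_westendorp(too_cheap: Sequence[float], cheap: Sequence[float],
                   expensive: Sequence[float], too_expensive: Sequence[float],
                   prices: Sequence[float]) -> Dict[str, Optional[float]]:
    """Grid approximation of two classic Van Westendorp intersection points."""
    return {
        # Optimal price point: "too cheap" meets "too expensive".
        "optimal_price_point": first_crossing(
            lambda p: share_at_or_above(too_cheap, p),
            lambda p: share_at_or_below(too_expensive, p), prices),
        # Indifference price point: "cheap" meets "expensive".
        "indifference_price_point": first_crossing(
            lambda p: share_at_or_above(cheap, p),
            lambda p: share_at_or_below(expensive, p), prices),
    }


# Six hypothetical respondents; each list holds one price threshold per respondent.
result = van_westendorp(
    too_cheap=[10, 20, 30, 15, 25, 45],
    cheap=[20, 30, 40, 25, 35, 55],
    expensive=[30, 45, 55, 42, 50, 70],
    too_expensive=[40, 55, 70, 45, 60, 90],
    prices=range(0, 101, 5),
)
print(result)  # {'optimal_price_point': 40, 'indifference_price_point': 45}
```

A fuller analysis would typically interpolate between grid points and plot all four curves; this grid scan simply returns the first candidate price at which the rising curve meets the falling one.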
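One form the new contract language could take is an inflation-linked escalator. The sketch below models a hypothetical clause, assuming each renewal year's price moves with a published CPI change but is clamped between a floor (here 0%, so prices never fall) and a cap (here 5%); the function name, the cap and floor values and the CPI readings are all illustrative assumptions rather than recommended terms.

```python
from typing import List, Sequence


def escalated_prices(base_price: float, annual_cpi_changes: Sequence[float],
                     cap: float = 0.05, floor: float = 0.0) -> List[float]:
    """Price for each contract year under a hypothetical CPI-linked escalator.

    Each year's CPI change is clamped to [floor, cap] before it compounds.
    """
    prices = [base_price]
    for change in annual_cpi_changes:
        applied = min(max(change, floor), cap)
        prices.append(prices[-1] * (1 + applied))
    return prices


# 8% inflation is capped at 5%; a -1% reading is floored at 0%.
print(escalated_prices(100_000, [0.08, 0.03, -0.01]))
```

The cap gives the customer cost predictability, while the floor protects the vendor from the "faster, better, cheaper" expectation of automatic reductions; where each bound sits is a negotiating choice.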

Inflation as a business risk will persist for the foreseeable future. TBR will be assessing it closely as public companies report their earnings and release their financial filing documents.