Since Arvind Krishna took the helm as CEO in April, IBM has pursued a series of acquisitions and partnerships to support its transformative shift toward an open hybrid cloud strategy. The company is further solidifying that strategy with the announcement of a partnership with Palantir that combines AI, hybrid cloud, operational intelligence and data processing into a single enterprise offering. The partnership will use Palantir Foundry, a data integration and analysis platform that enables users to manage and visualize complex data sets, to create a new solution called Palantir for IBM Cloud Pak for Data. The new offering, which will be available in March, will apply AI capabilities to help enterprises across a wide variety of industries further automate data analysis and break down the data silos that typically slow that process.

Combining IBM Cloud Pak for Data with Palantir Foundry supports IBM’s vision of connecting hybrid cloud and AI

A core benefit that customers will derive from the collaboration between IBM (NYSE: IBM) and Palantir (NYSE: PLTR) is relief from the pain points associated with adopting a hybrid cloud model, including integrating multiple data sources and the lack of visibility into the complexities of cloud-native development. By partnering with Palantir, IBM will be able to make its AI software more user-friendly, especially for customers who are not technical by nature or trade. Palantir's software requires minimal coding, if any, which makes IBM's cloud and AI business more accessible.

According to Rob Thomas, IBM’s senior vice president of software, cloud and data, the new offering will help to boost the percentage of IBM’s customers using AI from 20% to 80% and will be sold to “180 countries and thousands of customers,” which is “a pretty fundamental change for us.” Palantir for IBM Cloud Pak for Data will extend the capabilities of IBM Cloud Pak for Data and IBM Cloud Pak for Automation, and according to a recent IBM press release, the new solution is expected to “simplify how businesses build and deploy AI-infused applications with IBM Watson and help users access, analyze and take action on the vast amounts of data that is scattered across hybrid cloud environments, without the need for deep technical skills.”

By drawing on the no-code and low-code capabilities of Palantir's software, as well as the automated data governance capabilities embedded in the latest update of IBM Cloud Pak for Data, IBM aims to drive AI adoption across its businesses, which, if successful, can serve as an on-ramp to more hybrid cloud workloads. IBM perhaps summed it up best during its 2020 Think conference: "AI is only as good as the ecosystem that supports it." While many software companies are looking to democratize AI, Red Hat's open hybrid cloud approach, underpinned by Linux and Kubernetes, positions IBM to bring AI to Chapter 2 of the cloud.

For historical context, the acquisition of Red Hat marked the beginning of IBM's dramatic transformation into a company that places flexibility, openness, automation and choice at the core of its strategic agenda. IBM Cloud Paks, modular AI-powered solutions that enable customers to move workloads to the cloud efficiently and securely, have been a central component of IBM's evolving identity.

After more than a year of communicating to the market the critical role Red Hat OpenShift plays in its hybrid cloud strategy, Big Blue is now tasked with delivering, on top of that foundational layer, the AI capabilities it has been associated with since the inception of Watson. Leveraging the openness and flexibility of OpenShift, IBM continues to emphasize its Cloud Pak portfolio, which serves as the middleware layer and allows clients to run IBM software as close to or as far from their data as they desire. This architectural approach supports IBM's cognitive applications, such as Watson AIOps and Watson Analytics, while new integrations, such as the one with Palantir Foundry, will support the data integration process for customers' SaaS offerings.

The partnership will provide IBM and Palantir with symbiotic benefits in scale, customer reach and capability

The partnership with IBM is a landmark relationship for Palantir, providing access to a broad network of IBM's internal sales and research teams as well as its expansive global customer base. To start, Palantir will gain the reach and influence of IBM's Cloud Paks sales force, a notable expansion from its own current sales team of 30. Palantir primarily sells to companies with more than $500 million in revenue, many of which already have relationships with IBM. By partnering with IBM, Palantir will be able not only to deepen its reach into its existing customer base but also to access a much broader customer base across multiple industries. The partnership also gives Palantir access to the IBM Data Science and AI Elite Team, which helps organizations across industries address data science use cases and the challenges inherent in AI adoption.

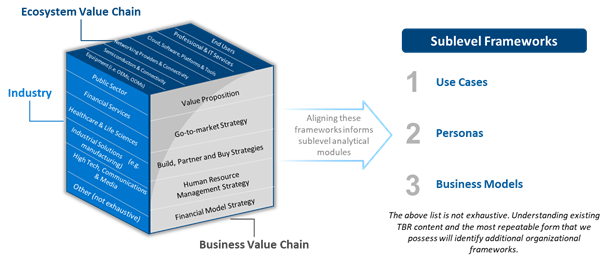

Partners such as Palantir support IBM, including by helping the company scale Red Hat software and double down on industry cloud efforts

As a rebrand of its partner program, IBM unveiled the Public Cloud Ecosystem program nearly one year ago, onboarding key global systems integrators, such as inaugural partner Infosys, to push IBM Cloud Paks solutions out to customers globally. As IBM increasingly moves up the technology stack, where enterprise value is ultimately generated, the company is emphasizing IBM Cloud Pak for Data, as evidenced by the November launch of version 3.5 of the solution, which adds support for new services.

In addition, IBM refreshed IBM Cloud Pak for Automation, integrating robotic process automation technology from its acquisition of WDG Automation. Alongside the product update, IBM announced that more than 50 ISV partners offer services integrated with IBM Cloud Pak for Data, which is also now available on the Red Hat Marketplace. IBM's ability to leverage technology and services partners to raise awareness of its Red Hat portfolio has become critical and has helped accelerate the vendor's industry cloud efforts following the launch of its financial services-ready public cloud and, more recently, its telecommunications cloud. Cloud Pak updates such as these highlight IBM's commitment to OpenShift as well as its growing ecosystem of partners focused on AI-driven solutions.

Palantir's software, which serves over 100 clients in 150 countries, is diversified across various industries, and the new partner solution will support IBM's industry cloud strategy by targeting AI use cases. Palantir for IBM Cloud Pak for Data was created to address challenges in multiple industries, including retail, financial services, healthcare and telecommunications, which Thomas described as "some of the most complex, fast-changing industries in the world." For instance, many financial services organizations have engaged in extensive M&A activity, resulting in fragmented, dispersed environments with multiple pools of data.

Palantir for IBM Cloud Pak for Data will address these challenges through rapid data integration, cleansing and organization. According to IBM's press release, Guy Chiarello, chief administrative officer and head of technology at Fiserv (Nasdaq: FISV), a company focused on supporting financial services institutions, reacted positively to the announcement, stating, "This partnership between two of the world's technology leaders will help companies in the financial services industry provide business-ready data and scale AI with confidence."