Infosys Collaborates with Clients and Partners to Navigate What’s Next in Their AI Transformation Programs

Strong Services Execution, Enabled Through Infosys Cobalt and Focused on Outcomes, Provides Foundation Upon Which Infosys Can Build AI Strategy

The steady performance of Infosys’ cloud business highlights the company’s pragmatic approach to its portfolio and go-to-market efforts, largely enabled by Infosys Cobalt.

Building on Infosys Cobalt’s success, the company now has an opportunity to steer client conversations toward AI and is positioning Infosys Topaz as the suite of services and solutions that brings it all together. Agentic AI (i.e., autonomous AI) is the newest set of capabilities dominating client and partner conversations. Scaling AI adoption comes with implications and responsibilities, which Infosys is trying to address one use case at a time. For example, earlier in 2024, Infosys launched the Responsible AI Suite, which includes accelerators across three main areas: Scan (identifying AI risk), Shield (building technical guardrails) and Steer (providing AI governance consulting). These capabilities will help Infosys strengthen ecosystem trust via the Responsible AI Coalition. Infosys also claimed it was the first IT services company globally to achieve ISO/IEC 42001:2023 certification, the international standard for AI management systems that addresses the ethical and responsible use of AI.

Regardless of the client’s cloud and AI adoption maturity, everyone TBR spoke with and those who presented at the 2024 Infosys Americas Confluence agreed that data strategy and architecture come first. Two separate customers perfectly summarized the state of AI adoption: “You can’t get to AI without reliable data across the supply chain,” and “GenAI is not a magical talisman. Companies need to build true AI policy and handle GenAI primitives before scaling adoption, with the shift in mindset among developers and users a key component.”

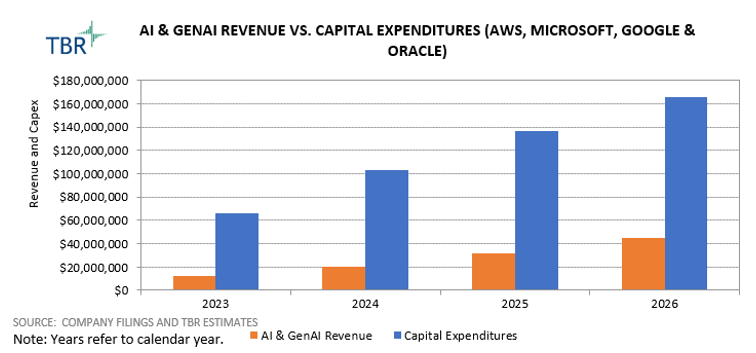

Infosys recognizes that AI adoption will come in waves. The first wave, which started in November 2022 and continued over the last 18 to 24 months, was dominated by pilot projects focused on productivity and software development. In the current second wave, clients are starting to pivot conversations toward improving IT operations, business processes, marketing and sales. The real business value will come from the third wave, which will focus on improving processes and experiences and capitalizing on opportunities around design and implementation. Infosys believes the third wave will start in the next six to 12 months. While this timeline might hold for cloud- and data-mature clients, only a small percentage of enterprises are AI ready across all components, including data, governance, strategy, technology and talent. Thus, it might take a bit longer for AI adoption to scale.

But as Infosys continues to execute its pragmatic strategy, the company relies on customer success stories that will help it build momentum. As another customer positioned it, “Infosys knows the data and processes. They know what they are talking about. In [the] 11 years since we have worked with them, they have not missed a single release with their … team delivering the outcomes.”

We believe Infosys’ position within the ecosystem will also play a role in how fast and successful the company is when it comes to scaling AI with clients. Infosys’ AI-related messaging includes 23 AI playbooks, which focus on value realization spanning technical and business components, such as Foundry and Factory models, as well as change management.

Of course, AI and GenAI will also disrupt Infosys’ business model and service delivery. While many of its peers are still debating internally how best to position themselves with clients and pitch the value of GenAI without exposing their business to too much long-term risk, Infosys’ thoughtful, analytics-enabled approach to commercial and pricing model management has positioned the company favorably with price-conscious clients that have predominantly been focused on digital stack optimization over the past 18 months.

Infosys’ success with large deals is a testament to the effectiveness of the company’s strategy. In FY4Q24, Infosys recorded $4.5 billion in large deals, the highest quarterly large-deal value in the company’s history. In addition, investing in and developing right-skilled talent who can support this model is critical to the company’s success. While Infosys has trained 270,000 of its employees on AI, we believe the composition and depth of these skills vary across service lines and clients, which matters all the more as outcome-based pricing models now represent half of the contracts in some service lines.

Infosys’ Investments in Engineering and Marketing Strengthen Company’s Position as a Solutions Broker

Navigating the hype of GenAI requires Infosys to also recognize and place bets on other areas that are tangential and have a more immediate impact on its value proposition and overall financial performance.

Infosys Tries to Bring CIOs and CMOs Together Through Infosys Aster

Building on the success of Infosys Cobalt and Infosys Topaz, the company launched Infosys Aster, a set of AI-amplified marketing services, solutions and platforms. While Infosys Cobalt and Infosys Topaz have horizontal applications, the domain-specific nature of Infosys Aster provides a glimpse into what we might expect to see from Infosys in the near future, given the permeation of GenAI across organizational processes. Additionally, the marketing orientation of Infosys Aster is not surprising since most GenAI use cases are geared toward improving customer experience.

Built around three pillars (experience, efficiency and effectiveness), Infosys Aster will test Infosys’ ability to capitalize on a new wave of application services opportunities and create unique solutions built on first-party data rather than providing off-the-shelf solutions just to ramp up implementation sales.

With DMS continuing to act as a conduit for broader digital transformation opportunities for Infosys, we expect the company to use Infosys Aster to position its marketing services portfolio more holistically, creating a bridge between CMOs and CIOs and bringing parts of Infosys’ Business Process Management subsidiary into the mix to capture marketing operations opportunities. Infosys Aster provides a comprehensive set of marketing services across the value chain of strategy, brand and creative services, digital experience, digital commerce, marketing technology (martech), performance marketing and marketing operations.

Although this is an area of opportunity for Infosys, rivals such as Accenture have an advantage in the marketing operations domain. We do believe the greater opening for Infosys comes from focusing more on driving conversations around the custom application layer and steering client discussions toward achieving profitable growth through the use of Infosys Aster. Client wins such as with Formula E and ongoing work with the Grand Slam tennis tournaments also allow Infosys to demonstrate its innovation capabilities beyond traditional IT services. Part marketing and part branding, wins such as these raise the profile of Infosys’ capabilities. Executing against its messaging is key for Infosys.

Infosys Engineering Services Will Close Portfolio and Skills Gaps Between IT and OT Departments

Infosys Engineering Services remains among the fastest-growing units within the company as Infosys strives to get closer to product development and minimize GenAI disruption on its content distribution and support position. Since the 2020 purchase of Kaleidoscope, which gave the company a much-needed infusion of new skills and the IP required to appeal to the OT buyer, Infosys has further enhanced its value proposition to also meet GenAI-infused demand.

Infosys recently announced the acquisition of the India-based, 900-person semiconductor design services vendor InSemi, which presents a use case where the company applied a measured risk approach to enhance its chip-to-cloud strategy as it tries to balance its portfolio of partner-ready solutions, such as through NVIDIA, with a sound GenAI-first cloud-supported story. Shortly after, Infosys also acquired Germany-headquartered engineering R&D services firm in-tech. The purchase will bolster Infosys’ Engineering Services R&D capabilities and add over 2,200 trained resources to regional operations across Germany, Austria, China and the U.K., as well as nearshore locations in the Czech Republic, Romania, Spain and India, supporting Infosys’ opportunities within the automotive industry. The in-tech deal certainly accelerates these opportunities, bringing in strong relationships with OEMs, which is a necessary stepping stone as Infosys tries to bridge IT and OT relationships.

We do not expect Infosys’ cloud business Infosys Cobalt to slow down anytime soon given the company’s market position for infrastructure migration and managed services as well as its well-run partner strategy with hyperscalers. Adding semiconductor design services bolsters that value proposition as buyers consider whether to use price-attractive CPUs or premium-priced GPU data centers. The latter currently dominates the marketplace, and we expect that trend will not change for at least the next 18 to 24 months. But having semiconductor engineers on its bench can help Infosys start supporting CPU-run models, further appealing to more price-sensitive clients. Meanwhile, Infosys is planning to train 50,000 of its employees on NVIDIA technologies. Lastly, the close collaboration between Infosys Engineering Services and Infosys Living Labs further extends the company’s opportunities to drive conversations with new buyers and demonstrates its ability to build, integrate and manage tangible products.

Infosys’ Reliance on Partners Provides a Strong Use Case of Trust and the Future of Ecosystems

The mutual appreciation between Infosys and partners was evident throughout the 2024 Infosys Americas Confluence. From a dedicated Partner Day to partner-run demos and various sponsorship levels to main-stage presentations, the experience reminded TBR of an event that a technology vendor would typically set up (think: Adobe Summit, AWS re:Invent, Dreamforce, Oracle OpenWorld, to name a few).

Infosys’ decision to feature some of its key alliance partners in a similar way that the tech companies do suggests strong alignment between the parties, starting with top-down executive support and extending through mutual investments in both portfolio and training resources and, most importantly, knowledge management. In conversations with partners throughout the event, it was evident that Infosys’ strategy is consistent regardless of the length of the relationship, from decades-long relationships, such as with SAP, to emerging but fast-growing alliances, such as with Snowflake. All partners agreed Infosys’ humble approach to managing relationships has put them at ease in working with Infosys and delivering value to joint clients.

After attending Infosys’ U.S. Analyst and Advisor Meeting in Texas in March, TBR wrote about Infosys’ relationship with Oracle, highlighting the level of trust and transparency Infosys typically deploys with partners. In TBR’s Summer 2024 Voice of the Partner Ecosystem Report we wrote: “Services vendors most frequently rely on their direct sales efforts and permission to demonstrate value with customers to drive revenue. Using demos and proof-of-concept discussions as a frequent tactic to engage with clients also highlights many of the profiled vendors’ consulting heritage.”

The technical expertise came through vividly and aligned with Infosys’ strength in staying within its own swim lane. In a main-stage discussion, Infosys and Hewlett Packard Enterprise (HPE) talked at length about the role each plays in pursuing opportunities in areas such as GenAI and the need for greater interaction through a multiparty model, including, for example, the value NVIDIA brings to the table. While one could argue that Infosys’ alliance partner strategy mirrors that of many of its competitors, as it seeks to secure foundational revenue opportunities while pursuing innovation through a measured risk approach, the company strives to differentiate by acknowledging its strengths and sticking to them rather than branching too far into partners’ territory, an approach enterprise buyers strongly appreciate.

With a Land-and-Execute Approach, Expansion Will Follow Naturally

Close to a decade ago, TBR analyzed what Infosys’ five-year strategy should look like. While the company went through leadership and strategy changes during this period to such an extent that one could cite concerns about consistency, those days are over. Infosys now has a well-grounded strategy, with executives executing on a clear vision rooted in a land-and-execute approach rather than the typical land-and-expand framework many of its peers aspire to. This puts greater pressure on the company’s quality and talent-retention strategies. While no one is immune to macroeconomic headwinds, the internal growth and training opportunities the company provides for its employees across all levels provide a strong backbone for a culture of learning and trust.

TBR will continue to cover Infosys within the IT services, ecosystems, cloud and digital transformation spaces, including publishing quarterly reports with assessments of Infosys’ financial model, go-to-market, and alliances and acquisitions strategies. Access reports as soon as they’re available with TBR Insight Center™ access.