MongoDB Is Positioning as the Standard Platform for Next-generation Apps

At our first MongoDB, Inc. event, MongoDB.local, TBR was able to learn firsthand about MongoDB’s evolution from an operational database to a developer platform for modern apps. The keynote, breakout sessions, executive Q&A and customer stories reinforced the value of MongoDB’s widely accepted document architecture, which is already powering many enterprise applications today and will propel MongoDB in its efforts to build new developer capabilities and cutting-edge applications. Partners, including all three major hyperscalers and system integrators (SIs), had a significant presence at the event, speaking to MongoDB’s ability to tap into a growing partner ecosystem, including global system integrators (GSIs), eager to harness a technology-agnostic data layer for generative AI (GenAI) leadership.

TBR Perspective

In his opening keynote, MongoDB (Nasdaq: MDB) CEO Dev Ittycheria put GenAI in perspective, offering the realistic view that while GenAI may help draft emails, it is too early for the technology to be used in truly transformative ways. In other words, there is not yet any comparison between the rise of GenAI and the internet boom and subsequent evolution of brands like Amazon (Nasdaq: AMZN), Netflix (Nasdaq: NFLX), Google (Nasdaq: GOOG) and Uber (NYSE: UBER), which launched new business models, elevated consumer experiences and completely transformed markets.

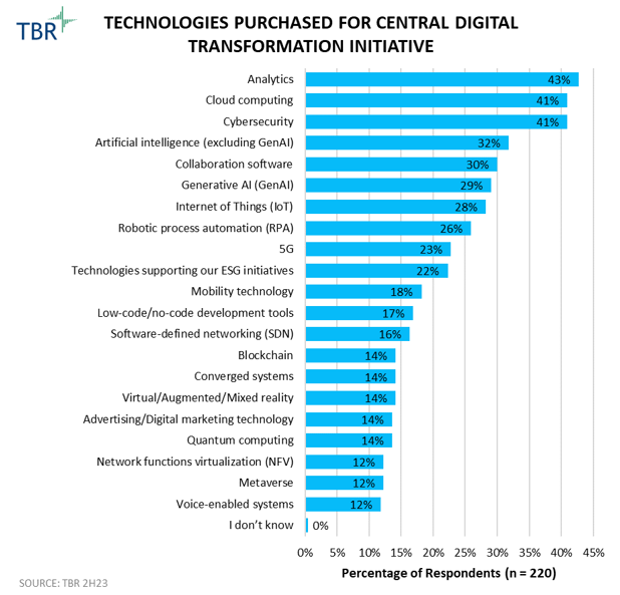

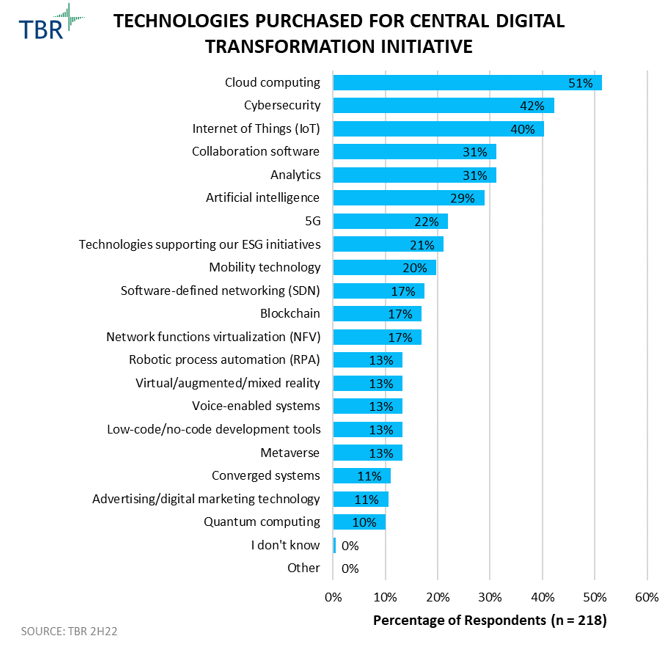

We see his point, as our own Cloud Infrastructure & Platforms Customer Research continues to reveal that back-office tasks like customer support and administration are the most common GenAI use cases. We also share his optimism that GenAI will help streamline developer productivity and thus pave the way for a new set of applications that are actually disruptive, not only in how they enhance productivity and cut costs but also in how they transform business operations.

MongoDB humbly recognizes that becoming the standard platform to enable this next wave of apps may be a longer-term play, as we are still only scratching the surface of GenAI’s potential. The first players to realize the benefit will be those that enable, provide and host large language models (LLMs), namely NVIDIA (Nasdaq: NVDA) and the hyperscalers. But the next set of companies to benefit will be platforms like MongoDB, and by honing its document database architecture, its relationships with the hyperscalers and an R&D engine geared toward the developer experience, MongoDB is positioning itself to become the standard developer platform for GenAI.

MongoDB has solidified its position as the most popular NoSQL database but quickly realized that to handle the demands of complex applications, developers need broader functionality than what a core operational database offers. Adding features like Atlas Search and Time Series collections to its document database has allowed MongoDB to deliver a more complete, integrated platform and grow mindshare with the 175,000 developers who try MongoDB for the first time each month.

Naturally, MongoDB has recently focused on adding features for GenAI as part of its longer-term play to power next-gen apps, as evidenced by MongoDB’s launch of Atlas Vector Search last year. Other cloud database vendors also offer vector search, which retrieves relevant information from unstructured data by comparing vector embeddings. Enhanced features like Vector Search are also key to the allure of Atlas, the fully managed, scalable, multicloud offering of MongoDB’s platform that is an alternative to Enterprise Advanced (EA) and Community Server, the free version of MongoDB.

Since Atlas launched in mid-2016, MongoDB has been actively leveraging GSI partners, its own professional services division and a series of code conversion tools as part of the “technology + process + people” methodology. This approach helps customers transition from on-premises MongoDB products, as well as external relational databases like Oracle and Microsoft SQL Server, to Atlas.

These initiatives, coupled with go-to-market support from the hyperscalers eager to have a feature-rich platform on their infrastructure, are making MongoDB largely synonymous with Atlas. With over 46,000 customers, Atlas now accounts for 66% of MongoDB’s total revenue and is available in 117 cloud regions across Amazon Web Services (AWS), Microsoft Azure and Google Cloud Platform (GCP).

MongoDB Rallies Ecosystem Around its Evolving Developer Platform to Power Next-gen Apps

As is a prerequisite for any successful platform company, MongoDB partners with all the major hyperscalers, tapping into their vast infrastructure scale, customer bases and native toolsets to attract new workloads. Looking to make Atlas the foundational data layer for GenAI in the cloud, MongoDB has made its Vector Search capability available with all the hyperscalers’ GenAI hosting services, including Amazon Bedrock, Google Vertex AI and Azure OpenAI Service.

Vector search is by no means a unique capability in the age of GenAI. Hyperscalers are also using vector search in their own databases, but the vast amount of operational data already sitting within MongoDB will be a key consideration for customers that would otherwise consider a stand-alone vector database.

Meanwhile, integrating with the hyperscalers’ code assistants and copilots to abstract complexity and make it easier for developers to build on MongoDB will be a key component of the partner strategy. From a go-to-market perspective, cloud marketplaces will remain key to MongoDB’s success, as they give customers a way to consume Atlas as part of their existing cloud commitments.

MongoDB has added hundreds of customers by incentivizing direct sales teams to land more self-service customers on the hyperscalers’ marketplaces, an approach that will serve as a productivity lever by freeing up strategic resources for top enterprise accounts with a focus on onboarding net-new workloads.

Key Takeaways From Each of MongoDB’s Hyperscaler Relationships:

- AWS: Over the years, we have seen AWS become more welcoming of ISV solutions that overlap with its own native services, provided those solutions land on AWS infrastructure. This shift has benefited partners. For example, MongoDB is delivering integrations with AWS, including Atlas Vector Search with Amazon Bedrock, which allows for the customization of models in Bedrock using data stored as vector embeddings in MongoDB. Developer empowerment has always been a hallmark of AWS, and with Bedrock, AWS’ goal is to democratize GenAI, making it accessible to not only the largest corporations but also startups, which closely aligns with MongoDB’s value proposition. Meanwhile, the AWS Marketplace, which has experienced triple-digit growth in the number of active customers, is unmatched in its expansiveness and continues to be an investment focus for the cloud giant, more recently in the form of new APIs, Infrastructure as Code (IaC) assets and lower prices.

- Microsoft: Microsoft (Nasdaq: MSFT) has taken a large stake in the legacy database market, and with roughly 20% of new MongoDB EA business coming from applications migrated from legacy relational databases, MongoDB arguably has a more contentious relationship with Azure than with the other hyperscalers. However, as highlighted during a one-on-one chat between Ittycheria and Microsoft VP of Global ISV Solutions Alvaro Celis, over the past two years Microsoft has become more effective at embracing the platform mindset to make Azure the de facto platform for enterprise data estates. Like AWS, Microsoft had to shift its mindset when it comes to partners; for Microsoft, this meant recognizing that if it wanted to truly be a prominent cloud platform company, it had to offer customers maximum choice. We are now seeing this approach facilitate ISV activity and pave the way for MongoDB to align with Azure more closely in areas like engineering to improve the Atlas on Azure experience and deliver more integrations with Azure data services, including Microsoft Fabric. MongoDB’s primary focus is becoming a standard for the enterprise at the operational data layer, and the company appears to recognize the value of staying in its own swim lane. Having partners deliver solutions like Fabric that address the upper-level analytics components of the stack and help make MongoDB part of a more complete solution is important and adds a level of differentiation to the Azure-MongoDB relationship that may not exist for other hyperscalers that have yet to adopt Microsoft’s platform-first mindset.

- Google Cloud: At the event, MongoDB and Google Cloud announced an expansion of their alliance to optimize Google Cloud’s Gemini Code Assist for MongoDB. This announcement comes as Gemini replaces Duet AI throughout the entire GCP portfolio. MongoDB has already optimized AWS’ code assistant, CodeWhisperer, by using a customized foundation model so CodeWhisperer can make suggestions specific to MongoDB use cases. While the technical relationship is less integrated, the coselling relationship has been well-developed for some time now and is poised for rapid growth given Google Cloud’s audience of born-in-the-cloud developers and increasing focus on empowering developers, the bread and butter of MongoDB.

New MongoDB Services Program Unites Major Players Across the GenAI Stack

One of the top announcements at MongoDB.local was the launch of the MongoDB AI Applications Program (MAAP), a high-touch services offering designed to make it easier for customers to build modern applications. Launch partners include AWS, Microsoft and GCP, as well as LLM providers like Anthropic and Cohere. Collectively, technology partners will align their technology with MongoDB Professional Services through a series of hands-on engagements like prototyping sessions and hackathons, both of which are good ways to engage developers and generate pipeline.

Despite all the hype, many customers are still looking for ways to get started with GenAI, and MAAP will be a good way to unite the multiple vendors that MongoDB customers will ultimately use for their GenAI projects. When customers do start taking advantage of GenAI, they will also look to tie the technology to a specific business outcome and work backward from that outcome. This is where the industry and business focus of the SIs comes in, and they will undoubtedly play a role in MAAP, supplementing MongoDB Professional Services.

MongoDB does work with Tier 1 GSIs like Accenture (NYSE: ACN) and Infosys (NYSE: INFY) but has long pursued a strategy of taking a stake in boutique SIs and scaling them over time. This strategy has a lot of merit, especially as our findings continue to reveal that while the GSIs are excited about the GenAI opportunity, they appear to view data architecture as a pass-through layer to higher-value components of the stack like analytics.

This may change as customers recognize the symbiotic relationship between data strategy and GenAI, but GSIs seemingly less interested in dedicating resources to a close-to-the-box data platform will appreciate having a niche SI train Tier 1 consultants on MongoDB. This expected rise in interest level will increase the likelihood that these smaller firms will get acquired by the likes of Accenture, creating new opportunities for MongoDB to expand its relationship with a brand-name professional services firm and tap into its enterprise base.

Conclusion

MongoDB.local NYC told a story of continued product and go-to-market execution, with a meticulous focus on improving developer experiences and driving a more cohesive partner ecosystem inclusive of technology and services players.

While the event showcased new partner integrations and Atlas feature updates, it did not highlight any major strategic changes, adding a refreshing and humble tone to the event that suggests MongoDB understands the role it plays and will continue to play in the GenAI revolution.

While in some ways it is waiting for the hyperscalers’ investments in GenAI, and the customer investments that follow, to materialize, MongoDB is actively developing and integrating with partners, recognizing that over time, it stands to benefit as customers look for a neutral platform to develop a new set of modern, disruptive applications.