Executing on its Collective Strategy through integrated scale and backed by robust strategic partnerships and platform-enabled services positions KPMG to remain a formidable competitor in the transforming professional services market

KPMG Global Chairman and CEO Bill Thomas kicked off the firm’s 2025 Global Analyst Summit by reinforcing the firm’s mission to be “the most trusted and trustworthy professional services firm.” As we have discussed at length across TBR’s professional and IT services research, firms like KPMG trade on trust with clients, alliance partners and employees. Putting a stake in the ground from the get-go provided Thomas and KPMG’s executives a strong foundation to rely on during the next two days as trust — at the human and technology level — was an underlying theme during presentations and demos.

Continuing the firm’s presentation, Thomas outlined KPMG’s evolving Collective Strategy, noting that the firm is 18 months into its latest iteration focused on “accelerating trust and growth.” Among the key enablers of achieving this goal is KPMG’s collapsing of its organizational structure from 150 country-specific member firms to a cluster of 30 to 40 regionally organized “economic units.” TBR views this pivot as the most natural evolution of KPMG’s operating model. For the Big Four, the biggest challenge is how to demonstrate value through integrated scale. Once completed, the reorganization will allow KPMG to minimize disruption and better compete both for globally sourced opportunities spanning what the firm calls “transactions to transformation” and for large, multiyear, geographically dispersed enterprise, function and foundational transformations.

Following Thomas’ presentation, Carl Carande, KPMG U.S. & global lead, Advisory, and Regina Mayor, global head of Clients & Markets, amplified KPMG’s strategy, reinforcing the importance of the firm’s people, technology partners and technology, with AI as the catalyst and change agent of success. For example, Carande recognized that technology relationships are changing in two ways: Relationships are becoming more exclusive, and the multipartner alliance framework offers a multiplier effect. TBR has discussed both themes at length throughout our Ecosystem Intelligence research stream.

Although KPMG continues to manage a robust network of alliance partners, the firm’s decision to highlight seven strategic partners (Google Cloud, Microsoft, Oracle, Salesforce, SAP, ServiceNow and Workday) underscores its recognition of these vendors’ position throughout the ecosystem. Mayor expanded on Carande’s discussion around alliances through an industry lens, describing “alliance partners leaning in with KPMG” as they realize that efforts to sell only the product will be insufficient. Meanwhile, on the KPMG side, alliance sales partners help figure out how to penetrate sector-specific alliance relationships.

Taking such a systematic approach across KPMG’s seven sectors (with the desire to expand these to 14) will allow the firm to demonstrate value and support its evolving Collective Strategy to act as a globally integrated firm. Additionally, new offerings like KPMG Velocity (discussed in depth later in this report) will arm KPMG’s consultants with the necessary collective knowledge management to serve global clients locally, further supporting the firm’s strategy.

One could argue that many of KPMG’s steps, including launching partner-enabled industry IP, reinforcing trust, developing regionally organized operations, outlining a select few strategic partners, and investing in platform-enabled service delivery capabilities, resonate with the moves taken by many of its Big Four and large IT services peers. We see two differences.

First, KPMG is laser-focused on exactly which of the strategies above to amplify, rather than taking a trial-and-error approach. Second, it is about timing. Some of KPMG’s peers have tried these strategies for some time, with limited success because of poor execution or timing. We believe that as the professional services market goes through its once-in-a-century transformation, KPMG has an opportunity to ride the wave, provided it maintains internal consensus and executes on its operational and commercial model evolution with minimal disruption.

KPMG’s evolution will largely stem from orchestrating alliances with seven strategic technology partners

At the event, KPMG asserted the role of tech alliance partners in building the “firm of the future.” Although the firm works with a range of ISVs, a targeted focus on its seven strategic technology partners has become key to the company’s growth profile, with 50% of its U.S. consulting business alliance-enabled. As the case of former audit client SAP shows, KPMG has been able to overcome barriers to ultimately help clients get the most out of technology. The firm’s approach of leading with client outcomes first and technology second is unchanged, but prioritizing a tech-enabled go-to-market approach will support KPMG’s position in the market amid two major trends.

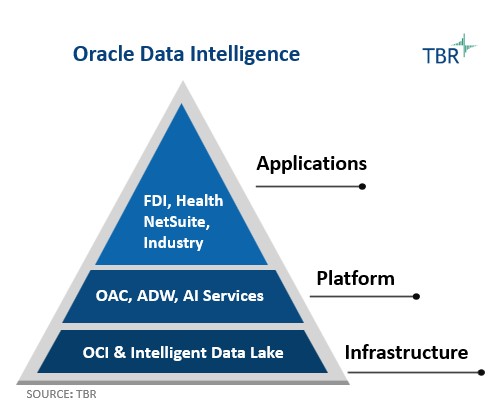

The first trend is the overall maturation in partner alliance structures we see from the cloud vendors. Changes in programmatic structure, including bringing sales and partner delivery closer together, and an all-around shift in how partners are viewed among historically siloed vendors, could act as enablers for KPMG’s newer capabilities, including Velocity. Second, there is a paradigm shift underway in where the value of tech exists. Increasingly, we see that value moving down the stack, a trend enabled by agentic AI and customers’ need to harness their own data and build new applications. Across the Big Seven, there is no shortage of innovation. As the value of AI shifts down the technology stack, KPMG can leverage the technology to deliver business outcomes to clients.

To fully describe KPMG’s evolving technology alliance strategy and the firm’s growing capabilities, KPMG leaders hosted a panel discussion that included leaders from Microsoft, SAP, Salesforce and KPMG clients. Todd Lohr, KPMG’s head of Ecosystems for Advisory, set the stage by saying the firm views ecosystems not as a collection of one-to-one alliances but as many-to-many relationships, an idea TBR has increasingly heard expressed by consultancies, IT services companies, hyperscalers and software vendors.

Having leaders from technology partners on stage to display a very common example of a tech stack — with SAP as the system of record (SOR), Salesforce in the front office, and Microsoft as the platform with Copilot — was a strong way to depict the “many-to-many relationships” structure and KPMG’s role in orchestrating the ecosystem, especially in scenarios where some of these ISVs may not have a native integration and/or formal collaboration with one another. Lohr noted that KPMG “needs to show up understanding how complicated multiparty relationships work before showing up and working them out ad hoc at the client.” That direct acknowledgment of the challenges inherent in multiparty alliances is decidedly not something TBR consistently hears from KPMG’s peers and partners.

KPMG moves away from vendor agnosticism

One of the most important takeaways for TBR from the summit was KPMG’s willingness, in the right circumstances, to aggressively abandon the typical agnostic approach to recommending technologies and instead make a specific technology recommendation where there is a deep understanding of the client needs. One client example highlighted this new(ish) approach. When the client reached out for advice on a sales-enablement platform, KPMG did not take an agnostic approach and, instead, told the client Salesforce was the only choice, based on KPMG’s evaluation.

Part of KPMG’s proposal rested on reworking the client’s processes so Salesforce could work as much out of the box as possible, limiting costs and customizations. As KPMG leaders described it, this reflected the opposite of most consultancies’ (and enterprises’) usual approach of forcing the business processes to work with a new technology. In a competitive bidding process, the lead KPMG partner, according to the client, answered questions on the Salesforce software and implementation issues without turning to others on the KPMG team, demonstrating mastery of Salesforce and the client’s IT environment that reassured the client about KPMG’s recommendations. Further, the client expressly did not want customization layers on top of Salesforce, knowing that would be more expensive over time.

Notably, the “fairly comprehensive implementation,” according to the client, took less than a year, including what the client said was “a lot of investment with KPMG in change management.” Recalling best practices TBR has heard in other engagements, the client team and KPMG called the Salesforce implementation Project Leap Frogs to avoid the word “transformation,” enabled champions across the enterprise, and held firm to the approach of making minimal customizations. In discussions with TBR, KPMG leaders confirmed that not being technology agnostic was contrary to the firm’s usual practice but was becoming more common.

Reinforcing that notion, a KPMG leader told TBR that the firm had lost a deal after it recommended Oracle and said SAP was not the right fit. The client selected SAP (for nontechnical reasons) but later awarded, without a competitive bidding process, Oracle-specific work to KPMG after noting respect for the firm’s honesty and integrity.

KPMG showcases client-centric innovation in action

ServiceNow implementation

A client story featuring a ServiceNow implementation that brought cost savings and efficiencies to the client notably emphasized change management, a core KPMG consulting capability that is sometimes overshadowed by technologies. The client described the “really good change management program that KPMG brought” as well as the emphasis on a clear data and technology core, out-of-the-box ServiceNow implementation, and limited customizations. In TBR’s view, KPMG’s approach with this engagement likely benefited considerably from the firm’s decades-long relationship with the client, playing to one of KPMG’s strengths, which the firm’s leaders returned to repeatedly in discussions with TBR: Trusted partnerships with clients create long-standing relationships and client loyalty.

Reimagining leaders

One client story centered on a five-day “reimagining leaders” engagement at the Lakehouse facility, conducted by the KPMG Ignition team. Surprisingly, KPMG included an immersive session with an unrelated KPMG team working on an unrelated client’s project that had little overlap with the business or technology needs of the leadership engagement client.

According to the KPMG Ignition team, the firm showcased how KPMG works, how innovation occurs at the working level, and how KPMG creates with clients, giving them confidence in KPMG’s breadth and depth of capabilities. Echoing sentiments TBR has heard during more than a decade of visiting transformation and innovation centers, KPMG Ignition leaders said that being enclosed on the Lakehouse campus made it easier for clients to be fully present throughout the engagement and removed from the distractions of day-to-day work.

KPMG kept the client in the dark about what to expect from the engagement, which prevented any biased expectations from creeping in before the engagement had even started. KPMG Ignition leaders shared additional insights, noting that it was a pilot program for rising leaders at the client, providing an immersive experience that showcased the power of the KPMG partnership.

Throughout the five-day immersion at KPMG Lakehouse, participants learned how to apply the methodologies that fuel innovation at KPMG while staying focused on one theme: reimagining leadership of the overall company and of the participants as next-generation leaders, as well as reimagining leadership capabilities at every level of the organization.

KPMG equipped the client’s leaders with methodologies emphasizing storytelling, design thinking and strategic insights, and strengthened the client’s culture by fostering high-performing, collaborative teams.

One final comment from the Ignition Center leaders: This pilot program “highlighted the fact that AI can be viewed as a wellness play across the agency if you free up capacity and understand what can be achieved.” Based on the use case and sidebar discussions TBR had with KPMG Ignition leaders, we believe Ignition Centers continue to evolve, although the basics remain the same: Get clients into a dedicated space outside their own office, use design thinking, and focus on business and innovation and leadership and change, not on technology.

The art of the possible

A final client story, presented on the main stage, wove together the themes of AI, transformation and trust. The client, a chemicals manufacturer and retailer, said KPMG consistently shared “what’s possible,” essentially making innovation an ongoing effort, not a one-off aspect of the relationship.

The client added that his company and KPMG had “shared values … and we understand each other’s cultures,” in part reinforced by KPMG dedicating the same team to the client during a multiyear engagement.

In TBR’s view, KPMG’s decision to highlight this client reinforced everything KPMG leaders had been saying during the summit: Relationships, built on consistent delivery and continually coming to the client with ideas and innovations, plus a commitment to the teaming aspect of the engagement, are KPMG’s superpower. Notably, this client was not a flashy tech company, a massive financial institution or a well-known government agency, and the work KPMG did was not cutting-edge or highly specialized but rather core KPMG capabilities — in short, what KPMG does well.

Velocity and GenAI: KPMG’s client-first approach to AI adoption and transformation

KPMG dedicated the second day of the analyst summit to AI, a decision that reflected the firm’s overall approach: Business decisions come first, enabled by technology. Supporting the firm’s AI strategy, KPMG has developed Velocity, a knowledge platform and AI recommendation and support engine underpinned by one universal method that pulls together every capability, offering and resource across the firm for the KPMG workforce. According to KPMG leaders, Velocity reinforces the firm’s multidisciplinary model and will become the primary way KPMG brings itself to clients.

In addition to sharing knowledge across the global firm, Velocity will help KPMG’s clients find the right AI journey that matches their ambitions — whether it be Enterprise, Function or Foundation — by allowing them to select a strategic objective they are trying to achieve, which function(s) they want to transform, and which technology platforms they want to transform on. The platform also reaffirms the firm’s acknowledgment of data’s role in AI. In fact, part of the rationale for Velocity was bringing the data modernization and AI business together while maintaining a focus on a sole client outcome. This means KPMG does not care whether customers build their data foundation with a hyperscaler or internally; as one leader in the AI Journey breakout session said, it is just about “helping clients do what they want to do.”

Velocity includes preconfigured journeys based on specific client needs, as developed, understood and addressed in all of KPMG’s previous engagements. Similar to many consultancies, KPMG begins engagements by developing an understanding of clients’ strategic needs and issues, rather than their technology stack. (TBR comment: Easy to say, hard to do, especially when a firm has practices built around specific technologies.)

Velocity is designed to add value to client engagements (including describing, calculating and being accountable). It will also bring a “tremendous amount of information” and is “highly tailorable,” according to a KPMG leader, who also noted that the platform’s adoption, use and usefulness over time will be key. KPMG leaders said the core aspects of AI — even agentic AI — are all the same, separated only by planning and orchestration. For example, KPMG’s AI Workbench underpins how it is bringing agents and AI-enabled services to its clients and its people. Velocity, then, is a KPMG offering where every step is focused on achieving client outcomes, which comes back to understanding clients’ key business issues, not simply their technology stack.

The internal launch of Velocity (starting in March 2025) into KPMG’s largest member firms brings to life the firm’s approach to AI. KPMG expects its member firms to be able to start unlocking the power of Velocity beginning in May, and will launch Velocity externally later in the year. Amid caution on the client side around the adoption and implementation of AI technologies, KPMG’s David Rowlands, head of AI, discussed how KPMG wants to be client zero around AI, helping to ease clients’ ethics and security concerns by working through experimentation and into adoption and scale. Rowlands highlighted the firm’s attention to knowledge and its need to fully benefit from AI. Training around AI, including the definition of AI and how to use it; creating trust within AI; and learning effective AI prompts also fit within this strategy, enabling both KPMG and clients to effectively embed AI across people and operational strategies.

Velocity, AI and the future of audits

Three other AI-centric comments from KPMG leaders stood out for TBR:

- With AI, “the road to value is paved with human behavior and change,” according to Rowlands, reflecting the firm’s emphasis on the business over the technology and the importance of change management — a core KPMG consulting strength.

- Rowlands also noted that AI is critical national infrastructure, dependent on energy, connectivity and networks, and should be considered a national investment priority and national security issue. In TBR’s view, framing AI this way, rather than as just a tool or another service to be sold, adds credibility to KPMG’s AI efforts.

- According to Per Edin, KPMG’s AI leader, “ROI is clear and documented, but still not enough adoption to be as measurable as desired.” In TBR’s view, Edin’s sentiments track closely with TBR’s Voice of the Customer and Voice of the Partner research, which have repeatedly shown that interest and investments in AI have outpaced adoption, particularly at scale.

In a breakout session, KPMG walked through the firm’s well-established KPMG Clara platform, a tool designed to help the firm complete its audits more quickly and accurately. In essence, KPMG creates a digital twin of an organization, reflecting the firm’s understanding of where AI can be applied. KPMG Clara Transaction Scoring enables auditors to deliver what the firm calls “audit evidence” and note “outlier” transactions. According to KPMG leaders, “AI agents perform audit procedures and document results for human review, just like junior staff.”

Critically, KPMG Clara audits every transaction, not just a sample of transactions, increasing the likelihood of catching problems, issues and outliers. By flagging high-risk transactions, KPMG can deploy professionals to focus on solving real problems rather than adjudicating false positives or meaningless issues. In TBR’s view, this represents the proverbial “higher-value task” long-promised by robotic process automation, AI and analytics.
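
The idea of scoring every transaction rather than a sample can be illustrated with a minimal sketch. This is not KPMG Clara's method, which was not described in detail at the summit; it swaps in a generic modified z-score (based on the median and the median absolute deviation), and the function name, threshold and transaction amounts below are all hypothetical.

```python
from statistics import median

def flag_outliers(amounts, threshold=3.5):
    """Return the indices of amounts whose modified z-score exceeds threshold.

    Generic robust-statistics technique, used here only for illustration.
    """
    med = median(amounts)
    # Median absolute deviation: a spread measure that outliers barely move.
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # all values (nearly) identical; nothing stands out
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]

# Hypothetical ledger: every transaction is scored, not just a sample.
amounts = [120.0, 95.5, 130.0, 110.25, 99.0, 105.0, 9800.0, 115.0, 101.5, 125.0]
flagged = flag_outliers(amounts)
print(flagged)  # indices of transactions a human reviewer would look at first
```

In this toy ledger only the 9,800.00 entry is flagged, so reviewer time goes to a single item instead of being spread across a sample that might have missed it.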

When pressed by TBR, KPMG leaders said clients were not looking for rate cuts but rather for higher-quality audits and new insights into their operations. Importantly, clients also expect to spend less time on an audit, freeing up professionals’ time: The client can do what they do, and KPMGers can stay focused on flagged issues and generate new insights.

TBR remains a bit skeptical, but if clients do not expect a rate cut when KPMG deploys AI to speed up the audit process and instead expect to spend less time internally on what should be a higher-quality audit, TBR considers that a fantastic way to position AI while also reducing KPMG’s professionals’ time. There are two unanswered questions: What happens to the apprenticeship model, in which less-experienced KPMG professionals learn the art, not the science, of audit? And, in a few years, will 95,000 professionals conduct 400,000 audits (twice the current number) or will 50,000 professionals (half the current staff) complete 200,000 audits?
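
The second question can be made concrete with a quick calculation. The sketch below assumes the current baseline implied by the parentheticals above (200,000 audits and 100,000 professionals); those baseline figures are an inference from the text, not numbers reported by KPMG, and the function name is illustrative.

```python
def audits_per_professional(audits: int, professionals: int) -> float:
    """Average number of audits completed per professional."""
    return audits / professionals

# Implied baseline (an inference, not a reported figure):
# 400,000 is "twice the current number"; 50,000 is "half the current staff".
current_audits = 400_000 // 2
current_staff = 50_000 * 2

scenarios = {
    "implied current": (current_audits, current_staff),
    "grow volume": (400_000, 95_000),
    "shrink staff": (200_000, 50_000),
}
ratios = {
    name: audits_per_professional(audits, staff)
    for name, (audits, staff) in scenarios.items()
}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f} audits per professional")
```

Under these assumptions, both scenarios roughly double output per professional (from about 2 audits each to about 4). The open question is whether that gain shows up as more audits or as fewer people.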

Regardless, as the company rolls out internally developed generative AI (GenAI) tools, the learning and experience captured through the firm’s implementation and change management process will undoubtedly be integrated into customer engagements involving third-party solutions. With SAP and Salesforce in attendance, KPMG zeroed in on each vendor’s AI strategy and how the firm plans to support it. To focus on Salesforce, Lohr echoed Salesforce CEO Marc Benioff in calling Agentforce the most successful Salesforce launch ever, which suggests a recognition from KPMG leadership of Salesforce’s agentic AI strategy.

For its part, KPMG highlighted the recent launch of an Agentforce Incubator, an experimental experience that can be delivered to clients from any location (a client site, a Salesforce event or a KPMG office) to ignite the ideation stage and begin exploring the road map from proof of concept to production. During one-on-one conversations, TBR explored KPMG’s view of its role in agentic AI, and we found it to be both pragmatic and valuable, similar to how the firm must be opinionated in broader digital transformation engagements.

KPMG’s journey to becoming an AI orchestrator will require the firm to take a stance on a vendor-by-vendor basis and arrive on-site with a preconceived understanding of the best path forward for clients given their goals. In addition to having an opinion, KPMG also recognizes it must help facilitate the road maps it lays out to clients, which will involve a heavy change management component, as well as a more technical design and development element. With the Agentforce example, once a targeted business outcome is established, an AI agent needs to be designed and developed to achieve the outcome. In many cases, a customer may lack the internal technical resources necessary to build the agent and tackle the problem. As KPMG avoids vendor agnosticism, the company can focus on building out technical resources with the vendors it chooses, building deeper benches with technical training associated with its strategic partners.

KPMG’s Lakehouse offers unique setting for analyst event

Less than 18 months after KPMG first broke from the traditional analyst event style, the firm did it again. Hosting 62 analysts and dozens of global executives, clients and partners at its flagship Lakehouse facility for two days of formal and informal interactions, presentations, client use cases and demos, KPMG demonstrated agility in the delivery and engagement format. At the same time, with a steady hand, the firm continued to execute on its vision, with its global solutions (Connected, Powered, Trusted and Elevate) and proven IP, methods and enablers coming together through Velocity.

KPMG held one-on-one sessions between analysts and executives midway through the first day so that executives were present and engaged. Additionally, KPMG saved the all-about-AI-and-nothing-else sessions for the second day, which came off as, “We get AI is important, but we are also realistic and keeping our heads on straight and not being ‘me too, me too’ about AI.” KPMG senior executives sat in on both the client case study and platform breakout sessions. Subtle message to analysts: This stuff matters enough across the firm to be worth KPMG partners’ time even if it is not in their area.

Conclusion

As a member of the Big Four, KPMG has brand permission and a breadth of services that are relevant to nearly every role in any enterprise. As the firm executes on its Collective Strategy, TBR believes KPMG will accelerate the scale and completeness of its offerings, building on a solid foundation and widening the gap between KPMG and other consulting-led, technology-enabled professional services providers.

KPMG’s global solutions (Connected, Powered, Trusted and Elevate), which resonate with clients and technology partners, have now been brought together into one transformation framework under KPMG Velocity. The framework gives KPMG’s professionals clear insight into the firm’s strengths and strategy and will, in the near future, underpin all of KPMG’s transformation engagements. KPMG Velocity’s evolving strategy will challenge KPMG’s leaders to execute on the promise of that transformation during the next wave of macroeconomic pressures, talent management battles and technology revolutions. At the same time, KPMG’s leaders recognize that their priorities are transforming the firm’s go-to-market approach, unlocking the power of the firm’s people, reimagining ways of working, and innovating capabilities and service enhancements.

Success in executing these priorities, in TBR’s view, will come as KPMG shifts from building a foundation to scaling alongside the growing needs of its clients and as the era of GenAI presents yet another opportunity and challenge. Striking the right balance between elevating the potential of GenAI as a value creator and accounting for commercial and pricing model implications will test the durability of KPMG’s engagement and delivery frameworks.

Although the firm has set in motion many of the aforementioned investments over the past 12 to 18 months, the one factor that is changing is speed. As one enterprise buyer recently explained to TBR: “GenAI will force all services vendors to change. The [ones] who [will] be [the] most successful will be [those] who do it fast.” With speed comes risk, which KPMG fully acknowledges and which is why KPMG Velocity is a differentiator for the firm in the market. With KPMG Velocity, all of KPMG’s multidisciplinary and heritage risk and regulatory considerations have been embedded across each transformation journey to ensure clients can remain compliant and avoid the pitfalls that can often arise during transformation.

Special report contributors: Catie Merrill, Senior Analyst; Kelly Lesizcka, Senior Analyst; Alex Demeule, Analyst; Boz Hristov, Principal Analyst