EY Reimagines Global Mobility: Human-centric, Tech-enabled and Business-critical

EY Global Mobility Reimagined 2025, Barcelona: Over two days in Barcelona, EY hosted more than 150 clients and a few industry analysts for its first in-person EY Global Mobility Reimagined conference since 2019. During the event, TBR spoke with EY leaders, EY technologists and EY clients from a diverse set of countries and industries. Nearly all the client attendees serve within their enterprise’s talent, mobility or human resources organizations, and a common vibe throughout the event was the changing challenges facing HR professionals. The following reflects both TBR’s observations and interactions at the event and our ongoing research and analysis of EY.

Three themes emerged over the two days of the conference, both from the presentations and in discussions with EY leaders and EY clients. First, rapidly changing technology, particularly AI, permeates every aspect of mobility, but the EY leaders and conference attendees returned repeatedly to the need to keep humans at the core. Second, EY did not emphasize or sell what EY can do but rather kept the focus on clients’ problems. A Tech Connect showcase featured cool new EY software and solutions, but the conference plenaries and breakout sessions never veered into a sales pitch for EY’s solutions. Third, in discussions with EY leaders, TBR heard a clear strategy for continued rapid growth and evolving technology alliances, underpinned by a commitment to managed services.

Humans remain central to mobility, although technology can help

Mobility — moving talent around the world on short- and long-term assignments — is inherently stressful for the employee and risk-inducing for the employer, so while technologies can improve the processes and mitigate risks, the experience remains a human one. Even with generative AI (GenAI) and agentic AI, everyone strives to keep humans fully at the center. Three moments during the conference highlighted this theme.

First, during a breakout session focused on emerging technologies, EY noted that nearly all current mobility-focused technologies and platforms have been designed around corporate requirements and policies. In the near future, technologies will be designed around the employee experience. EY’s new Microsoft Teams app for mobility, described below, provides an example of that shift. Second, during a panel discussion about the ethical concerns around AI adoption, one EY leader noted that even if agentic AI and other tools replace many of mobility professionals’ day-to-day tasks, nothing can replace the human touch, especially during a stressful time like an international relocation. Once again, the technology must enhance the employee experience. Lastly, EY professionals noted during a breakout panel on immigration that employers have had a mindset shift with respect to permanent residency.

Previously, employees tried to shift their residency status in a foreign country without assistance from their employer, reflecting employers’ concerns that once established in a country, those employees would be inclined to stay, perhaps necessitating a split with the employer. In some countries — Saudi Arabia was cited as a prime example — annual visa and work permit renewals are both expensive and stressful. Over a long-term assignment, paying for an employee to gain permanent residency could be cost effective and, by demonstrating support and bringing corporate resources to bear, could increase employee retention. Happy employees who stay longer and build better relationships with clients lead to better returns for the company on its investment in talent and mobility.

The event offered a forum for clients to discuss challenges and how they are coping

EY sold without selling. Every session included EY partners describing the firm’s views of the challenges facing mobility professionals and HR teams overall, but, with the exception of the Tech Connect showcase, EY’s capabilities were not front and center. In the majority of sessions, EY’s capabilities and the firm’s ability to help address those challenges simply did not come up. The EY partners focused on setting the overall parameters of the discussion, providing context around the challenges clients face, and then allowing those clients to engage with EY and with other clients about how they are coping.

In TBR’s view, this approach separates EY from peers while also reflecting the ethos TBR has observed at other EY events, such as the Strategic Growth Forum. EY provides clients a comfortable space to talk about issues and commiserate, without a hard EY sell. One attendee told TBR the conference made him feel better because his problems were not nearly as dire as those of the other professionals he spoke with during the event.

Mobility practice serves as the glue across EY globally

In sidebar discussions with TBR, EY leaders’ comments reinforced two trends about Mobility, and the firm’s People Advisory Services overall. First, People Advisory Services is growing ahead of the overall firm. The practice has been investing in managed services capabilities and scale, with an appreciation that noncommoditized managed services will be a significant component of People Advisory Services revenues. Second, Mobility remains an essential part of the glue that keeps EY operating as a global firm serving global clients.

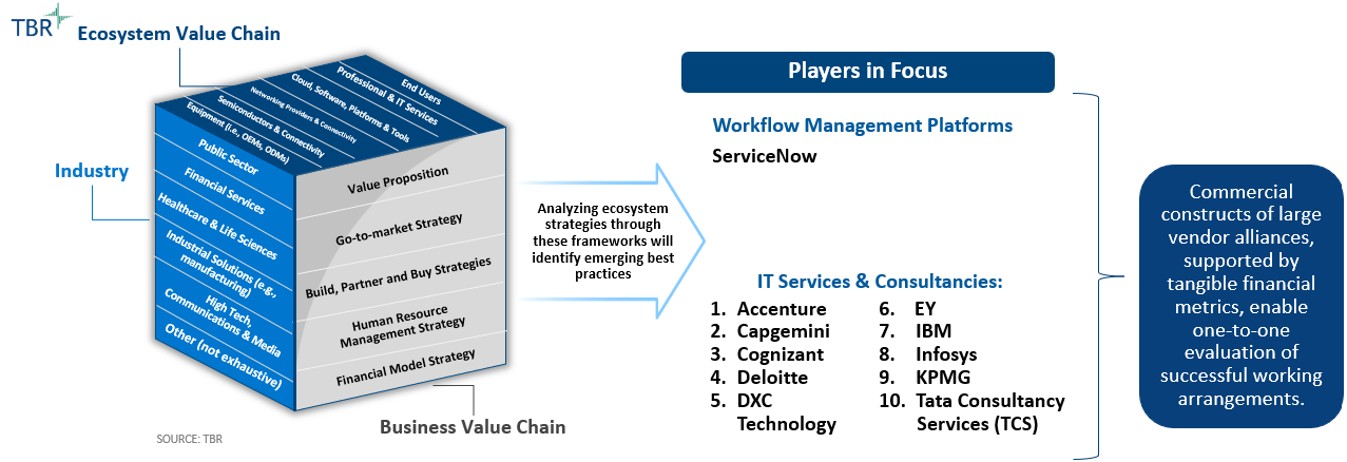

In addition, during the event TBR noted a clear emphasis on Microsoft as a strategic partner, but EY noted expectations that other technology alliance partners, including ServiceNow, will become increasingly strategic to Mobility. Regarding Mobility’s role as glue, Mobility services have challenged other Big Four firms, in part because, as practiced by EY, the burdens include forced cross-border coordination by and shared resources from separate member firms, managing a plethora of niche providers and technology partners, and deploying a software business model.

Countering those burdens, Mobility can serve as a centripetal force, helping align EY’s Tax, Audit and Advisory practices and giving additional weight to the firm’s global capabilities (and leadership, notably). As a complementary service to consulting or tax, Mobility advances EY’s client retention strategies, particularly with its largest clients. Internal benefits and external rewards. Win-win.

Emerging technologies begin to permeate HR

AI could not be ignored, in part because the notion of agentic AI-related disruption was an underlying current throughout the event. While not diminishing the challenges of adopting emerging technologies, EY professionals repeatedly stressed the need to adopt soon, smartly and with a long-term plan in place. In a breakout session, EY professionals used a now/next framework to describe a few trends in emerging technologies (including the corporate requirements and employee experience described above).

Currently, HR professionals and employees must wrangle with multiple technologies and platforms to execute on mobility challenges; in the near term, everyone will enjoy streamlined technologies with cohesive data-sharing strategies. Today, HR professionals rely on dashboards to provide analytics on mobility and other People Advisory Services issues, but soon predictive AI-driven analytics will provide insights and quicker decision making, with fewer (or perhaps no) dashboards.

Notably, when surveyed during the breakout session, the majority of HR professionals in attendance opted for “streamlined technologies” as their top priority. In a separate session, client attendees said their greatest expectation from AI would be data analytics and enhanced reporting.

A few other technology-centric comments and observations from the event:

- EY partners said Mobility professionals did not need to wait for the next GenAI update or release — the technology needed is here and can be applied now.

- Previous notions about data complexity may be outdated as the technology exists now to handle that complexity — HR professionals should focus on what they want to accomplish, not whether their data is perfect. (Side note: in the same discussion, EY partners observed that the biggest roadblock to adoption remains the availability of quality data.)

- The ethics around GenAI remain … murky. EY partners noted the environmental impact of energy-hungry data centers and suggested a gap exists between innovation and accountability, eventually cautioning for a go-slower approach to AI adoption.

Overall, technology played a role in every aspect of Mobility, with a common theme of enhancing the employee experience and measuring how EY’s Mobility practice can benefit a company’s strategy and employee retention, and even improve relationships with clients. In short: use technology wisely, with help from EY.

EY integrates mobility management into Microsoft Teams

The Tech Connect showcase included nine solutions, most notably EY Mobility Pathway, a corporate mobility management tool; EY Mobility Carbon Tracker, a customizable tool for scenario planning and carbon footprint measuring; and a new Microsoft Teams app for mobility, a seat-based SaaS offering deployed as an application on Microsoft Teams. The last one stole the show. Employees do not need to log on to another platform, remember another password or navigate an unfamiliar app; instead, they add the new Microsoft Teams app for mobility to their Teams experience. The software can be configured to clients’ specific mobility needs, such as shipping dates, travel, housing, tax and other elements of the international relocation journey.

The new Microsoft Teams app for mobility looks and feels like a Teams app, has all the employer data, and, as the employee experiences it, seamlessly pulls in data and information from the third-party providers the employer uses, such as shipping companies or short-term housing agents. EY partners explained that the new Microsoft Teams app for mobility is currently live with a few clients, and will go live soon across more of EY.

In TBR’s view, EY made a significant strategic decision in embedding the new mobility app into Microsoft Teams rather than creating a separate employee-centric dashboard. This keeps employees in an environment they are already comfortable working in and avoids additional stress during a difficult time. EY’s commercial model for the new Microsoft Teams app for mobility requires the firm to invest in software support and maintenance capabilities, but feeds into the firm’s overall managed services play.

Immigration rises to C-level topic

An immigration session resonated with TBR, in part because the TBR principal analyst in attendance once stamped visas at a U.S. embassy, but also because of the political issues that were openly discussed. EY partners noted that 2024 was a “super year” for elections globally, and immigration issues featured prominently in election politics in many countries. Extrapolating to global enterprises, EY partners made a convincing case that immigration has become a boardroom issue. EY’s Batia Stein and Chris Gordon noted that chief human resources officers (CHROs) and other executives surveyed by EY said the top option for solving talent gaps is moving talent where it is needed, no matter where on the globe — so, mobility.

As described above, assisting with permanent residency can alleviate some employee stress and enhance client and employee retention. In addition, using technology to enhance the employee mobility experience is not simply the right thing to do for employees; EY also believes the Mobility practice can be a business driver. And at a time when compliance issues have become more frequent and fraught, exacerbated by immigration raids and joint immigration and tax audits, Mobility can be a business driver for EY, too.

People Advisory Services global infinity loop reflects EY’s approach to clients’ issues

EY has a visual of its People Advisory Services Tax practice that features an infinity loop with People Advisory Services on one side and People Managed Services on the other, with all the related offerings creating an endless cycle of services, surrounded by EY’s other practices and offerings, such as Strategy & Transactions and Sustainability. The infinity loop helps explain EY’s positioning of its services and, perhaps more importantly, reflects EY’s understanding of its clients’ needs and challenges.

Companies keep recruiting, hiring, paying, rewarding, moving, repatriating, retiring and hiring in an endless loop, and EY has capabilities — including consulting, tax and software — that can accelerate movement around that endless loop. EY did not need to say that at the Global Mobility Reimagined conference, as clients understood it already. EY also has a stated ambition to grow People Advisory Services to more than $3 billion by 2031. Absent the worst possible global political and economic scenarios, including a drastic curtailment of global mobility, TBR believes that ambition is perhaps a bit too modest.