Will Boomi’s strategy succeed with new management?

It is always hit or miss whether a blog post will elicit dialogue from readers. TBR’s recent blog post “Who is going to want Boomi?” certainly struck a chord. The blog focused on the actions of the private equity firms intending to acquire Boomi, which ultimately led Boomi to provide TBR with deeper insight into its most recent achievements, activities and aspirations as the company moves to new corporate ownership. Boomi has a sound growth strategy with a high chance of success, assuming the company and its new owners are in strategic alignment.

Evaluating the business using an inside-out/outside-in construct provides a reasonable framework for the market implications Boomi, and really any integration PaaS (iPaaS) vendor, will face in the years ahead. The situation starts with a universal fact: Digital businesses gain a competitive advantage against peers if they automate the flow of data across their organization. Any step where a business has to add labor when a peer does not is a cost disadvantage. In this respect, Boomi’s value is twofold: 1) automations can be built into the process and tightly integrated so that they don’t break as applications evolve, and 2) organizations can create even greater advantage when they discover data from all of their sources and understand the data and applications involved in the automation process.

Outside in: The rise of data management and asymmetric competition

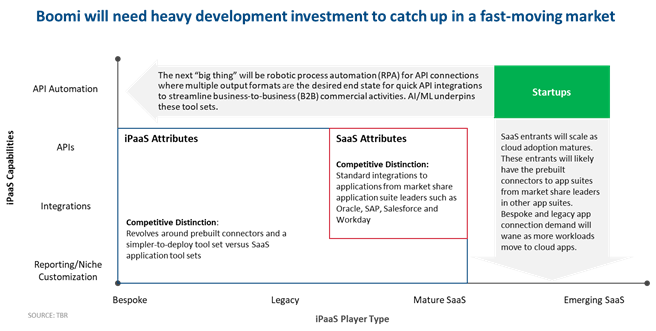

Our initial blog on the sale of Boomi referenced UiPath and startups Kong and Entefy as potential asymmetric challengers to Boomi’s core value proposition. Additionally, the exascale cloud platforms offer basic PaaS capabilities, providing prebuilt connectors and myriad additional services for security, data protection and data management. SaaS players, as mentioned in our prior blog, offer prebuilt integrations to popular, adjacent applications. Numerous vendors vie for what they generally call single-pane-of-glass management in multiple forms, with all vendors stressing analytics and automation in some manner.

Just as paramount is the economywide war for talent. Qualified talent versed in new technologies and tools is sought virtually everywhere, making it an employee’s market. As is the case with any acquisition, talent retention and recruitment will be key to the innovations Boomi has charted out in its development road map. In acquisition parlance, this is called putting “the golden handcuffs” on essential personnel to ensure they do not jump to a competing firm. Locking down key engineering talent will be critical.

Situationally, iPaaS tool sets can be acquired either in best-of-breed fashion or by standardization on one platform that is expansive enough to solve an immediate need and evolve with the organization. In large enterprises, there could be a mix of tools based on those brought into the organization via acquisition. In this way, iPaaS brands can be pigeonholed for what they have been offering and not necessarily given consideration for their go-forward innovations. In turn, tool purchases are often a derived decision as part of a broader initiative. The cost is justified in terms of the time savings for the business initiative rather than how the purchase will make the life of the IT department easier.

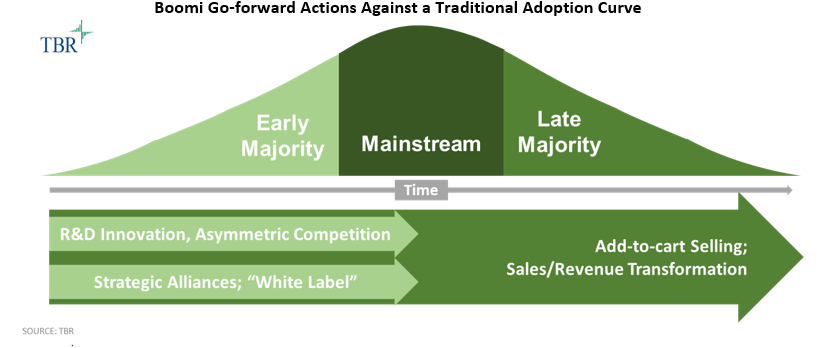

It is for this reason Figure 1 references “Strategic Alliances; ‘White Label.’” Externally, many global systems integrators (GSIs) are pivoting to managed services offerings, especially the advisory firms with deep tax and audit credentials, whose distinction comes from the tax and audit knowledge base they can automate to address data management, governance and compliance rules.

By underpinning GSI software development with its own tools, Boomi can gain a distinct selling advantage into large enterprises, as it will have these influencers and quasi-sellers at its disposal. Tighter relationships will also help Boomi keep an ear to the ground on the emerging technology vendors that GSIs and early-adopter enterprises are considering and those that pose an asymmetric threat to the Boomi core.

Furthermore, Boomi made clear it does not aspire to substantially grow its consulting and services operations. GSIs will find this clear swim lane delineation refreshing considering the ways in which traditional services and software firms are beginning to encroach on one another’s core offerings.

Inside out: Transforming direct selling and creating new demand through ‘add to cart’

As a technology firm selling technology to IT departments, Boomi has sound, traditional selling motions. Increasingly, however, we hear the clarion call of selling business outcomes, and that move to consultative selling to lines of business will be necessary, given that technology matters less and less while people and process matter more. In turn, studies show buyers want to self-research products and then self-provision those products from online portals.

Boomi has taken steps in that regard with the availability of its AtomSphere Go edition, which aims to give customers a frictionless buying experience at an entry price point of $50 per month. AtomSphere Go also gives Boomi a way to disaggregate the various services in the existing offer, allowing Boomi to move down market to reach late-majority enterprises. Additionally, Boomi recently announced AtomSphere Go is available on AWS Marketplace, the mecca for seamless, add-to-cart ordering.

That type of selling, often called “land and expand,” has a very different set of operating best practices than traditional direct, or blue suit, selling. The aspiration of this kind of selling is lifetime customer value (LCV). It requires a different type of telephone support that is part technical advisory and part consultative selling for cross- and up-sell opportunities with smaller enterprises.

It is also a business model where revenue and expense do not align to the 90-day quarterly reporting cycle. Embarking on such selling approaches requires a leap of trust, as costs will far outweigh revenue until scale is achieved and the “flywheel effect” kicks in. For startup operations this is a very prominent challenge. For Boomi, the challenge will come more from setting up operations with different motions and from balancing investment in existing selling motions against the wait for the new add-to-cart operations to pay off.

Situation analysis: Never confuse a clear view for a short distance

TBR has laid out Boomi’s situation analysis levers as 1) talent retention and ongoing innovation to continue evolving a traditional space (iPaaS) that is being encroached upon by startups and established vendors on all sides, 2) heightened partner selling, and 3) a challenging shift to the add-to-cart selling model, primarily to move down market, which requires fiscal patience. Provided there’s a vision match with the new owners, Boomi has solid platform depth and breadth and a reasonable innovation road map to survive and thrive in this ever-accelerating business pivot. In that pivot, automating data management, seamlessly moving data and empowering the right users to engage with data are paramount to maintaining a persistent competitive advantage, no matter the standard industrial classification (SIC) code.

So, what do you think? Will Boomi’s strategy succeed with new management?

In TBR’s newest blog series, What Do You Think?, we’re sharing questions our subject-matter experts have been asking each other lately, as well as posing the question to our readers. If you’d like to discuss this edition’s topic further, contact Geoff Woollacott at [email protected].