TBR dives into predictions about generative AI and its very real disruptions: Today, organizations are exploring ways to leverage GenAI to optimize how they operate the front lines of their customer service processes via contact centers, an industry that currently employs over 11 million individuals; and tomorrow, the technology will provide an alternative to enterprises’ IT departments, which must frequently tap third-party services partners for custom software development, a market that was valued at nearly $25 billion in 2022.

GenAI Will Optimize All Workflows and Disrupt Those Who Ignore It

Generative AI (GenAI) has supplanted yesterday’s technologies, such as the metaverse, in today’s global conversation, quickly blossoming into a new hype machine for the industry to follow with curiosity. While other recent hype cycles have failed to deliver, is this the one that will deliver on its promises? The short answer is yes. It will not be immediately used in the ways that sci-fi thrillers would lead you to believe, but business leaders across every industry will deploy AI to augment today’s workforce by eliminating mundane tasks, freeing up workers for high-value tasks and doing away with certain roles in the workforce over time. In fact, business leaders who fail to make GenAI deployment a strategic priority risk weakening their competitive position as peers streamline productivity and expand profitability, while at the same time improving employees’ experience by reducing the administrative burden.

Reflecting on early development, TBR predicts GenAI will bring about two very real disruptions:

- Today, organizations are exploring ways to leverage GenAI to optimize how they operate the front lines of their customer service processes via contact centers, an industry that currently employs over 11 million individuals.

- Tomorrow, the technology will provide an alternative to enterprises’ IT departments, which must frequently tap third-party services partners for custom software development, a market that was valued at nearly $25 billion in 2022.

These disruptions were brought to light following an interview TBR conducted with a technology leader, which is covered at length later in this report. The industry practitioner, Alexander Titus, vice president at Colossal Biosciences, stated that the use of OpenAI’s GPT-4 had immediately resulted in a 10% productivity increase across his software development team. Titus noted that as the technology matures, “we will all become code reviewers and writing editors, instead of raw generators.” Individuals who have used the technology may understand his sentiment. Today’s technology incumbents certainly have responded in a way that would lead one to believe that GenAI’s disruptive potential is real.

Technology Giants Invest Billions to Join the Race to Deliver GenAI

Technology vendors are recognizing the differentiated value GenAI capabilities can provide to augment their core offerings. Many of the largest cloud providers are making significant investments to capitalize on the opportunity, led by Microsoft (Nasdaq: MSFT), which has made the biggest splash. In January 2023 Microsoft invested another $10 billion into ChatGPT owner OpenAI, expanding its collaboration with the AI leader and earning Microsoft preferred cloud delivery rights. Through this agreement, GPT-4’s diffusion across Microsoft’s portfolio has already begun, affording Microsoft an advantage for the moment. Competitors, such as Google Cloud (Nasdaq: GOOGL) and Amazon Web Services (AWS) (Nasdaq: AMZN), must develop GenAI capabilities elsewhere, through either internal R&D or partnerships and acquisitions.

Microsoft Emerges as GenAI Leader as It Infuses GPT-4 Across Core Catalogs

Microsoft has been involved with OpenAI since 2019, when Microsoft announced a $1 billion investment and agreed to collaborate on a platform to create and run new AI tools. Recently, the two organizations greatly expanded their partnership, with OpenAI awarding Microsoft preferred cloud delivery rights and Microsoft investing $10 billion. For Microsoft, the partnership represents a stake in the current GenAI leader and pioneer. Since the agreement, Microsoft has been busy embedding GPT-4 across its platforms through an expansion of its AI assistant, Copilot. Previously, Copilot served GitHub users by providing code recommendations and generation, much like GPT-4’s targeted use case.

Now, Microsoft intends to embed GPT-4 within Copilot, supercharging its capabilities, then deliver the feature across Microsoft’s portfolio of applications. Content generation represents one of the greatest emerging use cases for GenAI, and Microsoft’s leadership in productivity and developer platforms, through Microsoft 365 and GitHub, will complement this use case well. In addition, Microsoft will deliver the Azure OpenAI Service, allowing enterprises to design and train GenAI models around their proprietary data and workflows. With cost becoming a more prevalent customer concern, the efficiency gains from automated workflows will become more attractive, supporting adoption in the short to medium term. Controlling preferred rights to a leader in this space, Microsoft stands to benefit significantly from this demand.

Google’s History in AI and Machine Learning (ML) Will Benefit Product Development

Backed by Alphabet’s AI-first strategy, Google Cloud has opted to invest in internal R&D. The company has long been viewed as a leader in AI, known for funding research and contributing open-source projects. In fact, the Transformer architecture, a core component of large language models like generative pre-trained transformers (GPTs), was largely pioneered by Google and released to the public. Additionally, from a cloud standpoint, the Google Cloud AI, Vertex AI and TensorFlow platforms are highly regarded within the AI community, and TensorFlow has developed a solid following since being made open source in 2015. Like OpenAI, Google has been working on large language models and regularly touts the capabilities of its LaMDA (Language Model for Dialogue Applications) model.

To address ChatGPT specifically, Google has released Bard, a LaMDA model tailored to support similar use cases, such as question answering, text summarization, content generation, and AI-powered search. However, early reports have favored ChatGPT’s capabilities relative to Bard, which surely has Google growing its R&D budget for GenAI. TBR expects Google’s experience in the area will pay dividends in product development, allowing the company to remain competitive long-term. Still, with CEO Sundar Pichai declaring a “code red,” which will redirect developer teams toward GenAI tools, it is possible GenAI, specifically ChatGPT, poses one of the greatest competitive threats Google has navigated in a long time.

AWS Responds to Microsoft by Announcing Bedrock and CodeWhisperer Updates

As TBR worked to publish this report, AWS burst into the GenAI conversation with several announcements around solutions that will allow enterprise customers to access this technology on its platform. AWS’ new cloud service, Amazon Bedrock, takes a blended approach relative to Google and Microsoft by offering foundation model services powered by ISV partners (initially AI21, Anthropic and Stability AI) alongside the company’s internally developed large language models, referred to as Amazon Titan. These services will compete directly with Azure OpenAI Service by providing enterprise customers with a platform to develop GenAI tools tailored to their specific workflow needs. Additionally, the company responded to Microsoft’s development of Copilot X with new CodeWhisperer updates that will embed GenAI functionality into its existing code development platform.

Will GenAI Be Another Emerging Technology That Gets Stuck in Pilot Mode?

While ChatGPT is impressive, hype cycles in technology are frequently traps that obscure the true disruptive power of innovation, presenting overblown scenarios that fail to come to fruition. Industry 4.0, for instance, provides a great example of a highly regarded trend that, at least within the originally anticipated timeline, failed to deliver on the promised outcomes. Since a German government body coined the term Industry 4.0 in 2011, technologies like industrial IoT (IIoT) and automation have been touted as a mechanism for manufacturers to improve machine uptime, optimize output, and automate factories to improve operational efficiency and expand margins. However, the IIoT market has faced numerous development bottlenecks, many of which go beyond the capital investment required to either build net-new factories or retrofit existing factories with new technologies and machines to then digitize legacy processes.

For instance, a lack of standardization has prevented interoperability between the software layer and operational technology (OT), ranging from network protocols to data formats. As a result, organizations cannot simply deploy an intelligent manufacturing solution but must also modernize the adjacent first- and third-party systems included in the process. This is a costly endeavor that likewise necessitates new ways of designing ecosystems across the technology value chain, a dynamic that has delayed Industry 4.0 market development despite vendors converging on the opportunity for over 10 years.

Despite All Its Promises, GenAI Will Face Its Own Adoption Challenges

Unlike Industry 4.0, GenAI’s near-term use cases do not appear to face implementation challenges. Vendors like Salesforce can simply embed proprietary GPT capabilities across core offerings like Customer 360 and immediately grant their existing install base access to begin optimizing their productivity. For instance, in March 2023 Salesforce (NYSE: CRM) announced Einstein GPT for Commerce, a solution that provides tailored recommendations to users. Drawing from real-time data in Salesforce Data Cloud, the offering pairs Salesforce’s proprietary AI IP with ecosystem partners’ AI technologies. While this portfolio strategy allows vendors to more quickly proliferate GenAI in the market, it does not necessarily mean GenAI will smoothly sail into enterprise IT.

Data privacy, for instance, is already emerging as a problem for enterprise customers, evidenced by recent developments at Samsung. Three software engineers at Samsung reportedly pasted meeting notes and source code into ChatGPT, releasing confidential data and information to OpenAI. A core component of GPT models is their ability to train on input data to support their own improvement, which means that enterprises looking to utilize the tool risk handing over valuable data to the AI provider. This will concern many enterprise customers, who will in turn demand privacy protocols that ensure confidential data remains private and protected. As a result of its mishap, Samsung announced its intention to develop a GPT tool in-house to ensure security, and many large enterprises with the resources to fund such an initiative may follow a similar path unless data protection is ensured.

Outside of enterprise concerns, regulators in the European Union, which have long been stringent around data protections and sovereignty, may restrict the use of third-party GPT models. Italy has already banned the use of ChatGPT within the country, citing data protections as a core reason, and others may follow. More crucially, the nascency of GenAI will require vendors to clearly articulate potential use cases to drive adoption.

While content generation presents an easy-to-understand use case, deeper, specialized workflow automation will be more difficult to prove ROI on, as it will require greater time and money to tailor such automations to the enterprise. TBR believes this work, paired with previously discussed challenges such as data protection, will represent a significant consulting opportunity for the IT services community. Specifically, IT services firms possess not only the trust of buyers but also the knowledge of buyers’ businesses to educate clients and then help tailor GenAI tools to their business needs.

Biotech Pioneer Colossal Uses GenAI to Speed Up Woolly Mammoth’s Return

Despite GenAI’s nascency, business leaders are already recognizing the benefits of GPT-based tools, specifically GPT-4. In early April 2023 TBR spoke with Titus, who founded Bioeconomy.XYZ in 2020 after holding roles such as the head of Biotechnology Modernization for the Department of Defense and strategic business executive for Google’s Public Sector Healthcare & Life Sciences. Today, Titus is the vice president of Strategy and Computational Sciences at Colossal Biosciences, a genetic engineering and biosciences company that creates innovative technologies for species restoration, critically endangered species protection and ecosystem restoration. The company is best known for its work to de-extinct the woolly mammoth, thylacine and dodo.

The discussion focused on the efficiency improvements Titus has seen using GPT-4. He stated that after his developer teams began using GPT-4 in their day-to-day workflows, he “immediately saw a 10% increase in my group’s productivity.” In one specific use case, which involved question answering and code generation, Titus had a “ML developer spend 45 minutes asking questions to GPT-4 about how to build a CRISPR (Clustered Regularly Interspaced Short Palindromic Repeats) ML model. He had no biology background but built a prototype, with example Git repos and prototype code, in 45 minutes. Without GPT-4, it would have taken him three days to do that.”

Titus continued, stating, “GPT-4 really takes the tedium out of things. I asked GPT-4 to connect to an open-source API, download the data, format the database and provide me with the documentation. It took 10 minutes.” After years of experience working with AI and ML across many disciplines, Titus today feels that “we will all become code reviewers and writing editors, instead of raw generators. As a practitioner of AI/ML, I cannot understate the impact of this technology.” And it is not just Titus; the productivity improvements he is seeing today are also being recognized elsewhere, such as in a recent study conducted by the Massachusetts Institute of Technology (MIT), which found that white-collar workers were able to complete tasks 37% faster using ChatGPT.

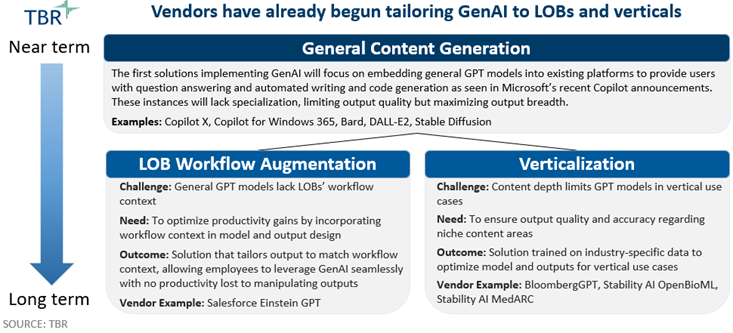

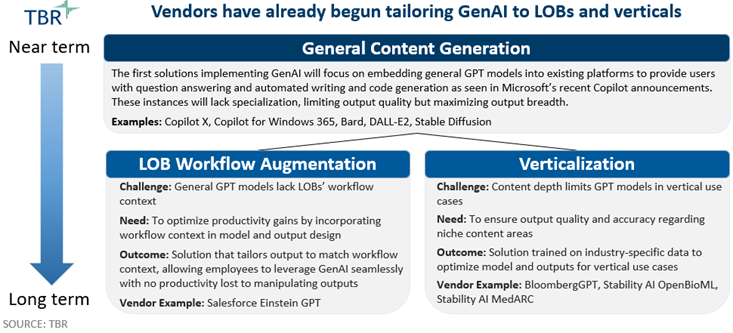

GenAI Is Already Being Tailored to Less Generic Enterprise Use Cases

The efficiency gained from GPT models drew attention quickly, and Reuters reported that ChatGPT has set the record for the fastest-growing user base among web-based applications.

This has translated into strong awareness among enterprise leaders, with many already allowing or even promoting the use of GenAI in day-to-day operations. The use of GenAI today reflects the early stages of GPT models, displaying a generalized model capable of serving a wide range of topics. However, as a model’s training data and parameters broaden, the quality and accuracy with which it supports specific use cases tend to worsen. This will drive future development around niche GPT models specialized by vertical and line of business (LOB). These models will be designed and trained around industry- and workflow-specific data, optimizing functionality within a niche.

Content Generation Will Be Embedded in Existing Platforms

The first GPT-powered applications are focused on embedding GenAI tools into existing platforms to provide users with question answering and automated content generation, as seen with Microsoft’s recent Copilot developments. Copilot, which previously provided developers with code recommendations in GitHub using OpenAI’s Codex model, has been turbocharged using GPT-4, leading to the announcement of GitHub Copilot X. This update will improve upon the application’s existing code-generation capability, as well as bring new chat functionality to the command line and pull requests to support question answering. The move will massively benefit developers, enabling users to access the productivity improvements reported by Titus and observed in the MIT study. Microsoft will bring the updated Copilot to Microsoft 365, where it will provide similar capabilities in writing-based workflows.

As GPT Models Are Tailored to LOBs, Client Contact Centers Will Face Disruption

Developing on top of the existing capabilities, platform vendors will work to embed GPT models within their workflows to accelerate user productivity and improve ROI. Salesforce, through Einstein GPT, is showing how this will be done. Einstein GPT will be able to ingest real-time data stored within Salesforce to generate content that is continuously updated to reflect current customer information, such as personalized sales emails, automated responses for customer service agents and auto-generated marketing materials, improving productivity across CRM roles.

In some specific cases, the tool can transform entire roles. Customer-service call centers, for instance, have already seen significant automation, and the improved functionality provided by GPT models will take automation even further. Industry estimates state call centers employ over 11 million workers globally. If the 10% productivity improvement seen by Colossal Biosciences can be replicated within this function, the industry would be empowered to reduce staff meaningfully without jeopardizing customers’ service experience, which businesses may be more inclined to do as they look to cut costs in the current economic environment.
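To make the scale of that scenario concrete, the arithmetic can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not a TBR forecast: it assumes the 10% gain Titus reported for a software development team transfers unchanged to contact center agents and that total service demand stays constant. The function name `equivalent_capacity` is hypothetical and introduced only for this example.

```python
def equivalent_capacity(workforce: int, productivity_gain: float) -> float:
    """Return how many workers' worth of output is freed up when every
    worker becomes (1 + productivity_gain) times as productive and the
    total amount of work to be done stays the same."""
    # The same total output now needs workforce / (1 + gain) workers.
    required = workforce / (1 + productivity_gain)
    return workforce - required


# "Over 11 million" workers, per the industry estimate cited above.
CALL_CENTER_WORKERS = 11_000_000
# Assumption: the 10% gain seen at Colossal Biosciences carries over.
GAIN = 0.10

freed = equivalent_capacity(CALL_CENTER_WORKERS, GAIN)
print(f"Roles' worth of capacity freed: {freed:,.0f}")
```

Under these assumptions the freed capacity is on the order of one million roles. The result is highly sensitive to the assumed gain, which has not been measured in a contact center setting in this report.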

As GenAI Matures, It Will Not Just Disrupt Unskilled Labor Markets

In the context of enterprise IT departments, consider the earlier provided examples in which Titus and his developer teams used GPT-4 to expedite code generation. Organizations have often stated that IT can become a bottleneck to their innovation efforts, as IT departments become overloaded with basic requests across LOBs, taking their time away from higher-value projects. To mitigate this, many organizations turn to the IT services community to help handle their workload, which may be as simple as developing APIs across the disparate third-party systems in hybrid IT environments to support specific business processes.

What if AI and automation technologies like GPT-4 could mitigate these challenges for enterprise IT? For example, what if GPT-4 could assist junior developers as they work through a large backlog of LOB requests, thereby allowing an organization’s top developers to focus on higher-value work? This outcome would result in less need for support from IT services partners on day-to-day IT challenges, particularly around custom software development, a market that generated nearly $25 billion in revenue in 2022, according to industry estimates. As with every technological disruption dating back to the industrial revolution, new opportunities will arise in the wake of the upheaval. In the context of GenAI, where will a new $25 billion market arise if custom software development engagements for IT services entities are displaced?
