Oracle’s Full-stack Strategy Underscores a High-stakes Bet on AI

Oracle AI World, Las Vegas, Oct. 13–16: The theme of this year’s event, newly rebranded as Oracle AI World, was “AI changes everything,” a message supported with on-the-ground customer use cases in industries like healthcare, hospitality and financial services. New agents in Oracle Applications, the launch of Oracle AI Data Platform, and notable projections for Oracle Cloud Infrastructure (OCI) revenue also reaffirmed the emphasis Oracle is placing on AI. Though not immune to the risks and uncertainties of the AI market at large, Oracle is certainly executing, with the bulk of revenue from AI contracts already booked in its multibillion-dollar remaining performance obligations (RPO) balance. And yet, as OCI becomes a more prominent part of the Oracle business, big opportunities remain for Oracle, particularly in how it partners, prices and simply exists within the data ecosystem.

OCI rounds out the Oracle stack, strengthening its ability to execute on enterprise AI opportunity

2025 has been a transformative year for Oracle. With the Stargate Project — which pushed RPO to over $450 billion — and the recent promotion of two product leaders to co-CEOs, Oracle is undergoing a big transition that aims to put AI at the center of everything. In both AI and non-AI scenarios, the missing piece has been OCI, which plays a critical role in Oracle’s long-solidified application and database businesses.

But now that OCI is transitioning to a robust, scalable offering that could account for as much as 70% of corporate revenue by FY29 (up from 22% today), Oracle is much better positioned than in the early days of the Gen2 architecture. For the AI opportunity, this means using the full stack — cost-effective compute, operational applications data, and what is now a fully integrated AI-database layer to store and build on that data — to guide the market toward reasoning models, making AI more relevant for the enterprise.

Steps Oracle is taking to simplify PaaS have already been taken by others, but the database will be Oracle’s big differentiator

Cross-platform entitlements from the data lake mark a big evolution in Oracle’s data strategy

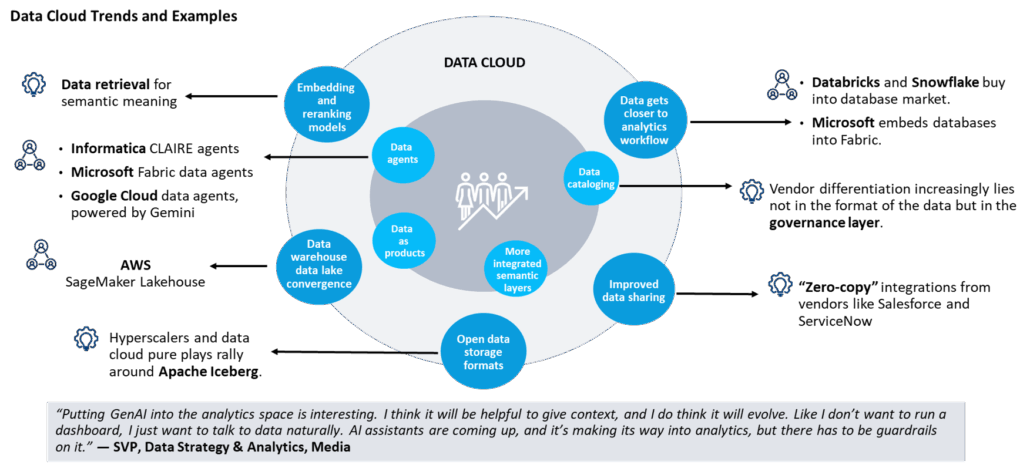

For a long time, most of the market seemed against open standards, but in the era of AI, storing data from disparate tools in a single architecture that works with open formats and engines has become common practice. With SQL and Java, open standards have been part of Oracle since the beginning, but Oracle is pivoting more heavily in this direction, with what seems to be a broader vision to support analytics use cases on top of the operational database, where Oracle is strongest. For example, at AI World, Oracle launched Autonomous Data Lakehouse; given how the market has revolved around data lakes and their interoperability, this launch has been a long time coming.

An evolution of Autonomous Data Warehouse, Autonomous Data Lakehouse is integrated directly into the Oracle Database, meaning the database can connect to the data lakehouse, read and write data in open formats, including Apache Iceberg, and work with analytics engines like Spark that are used to get data in and out of the lakehouse. Aside from reaffirming Oracle’s commitment to open standards and providing a testimonial for the Apache Iceberg ecosystem at large, Autonomous Data Lakehouse sends a strong message to the market that a converged architecture does not equal lock-in; with Oracle, customers can still pull data from a range of databases, cloud warehouses, streaming and big data tools. Access to application data, however, remains specific to Oracle applications.

As OCI becomes a more prominent part of the business and agentic AI further disrupts the applications market, it will be interesting to see whether Oracle takes the opportunity to support external applications natively. Last year’s decision to launch a native Salesforce integration within Fusion Data Intelligence (FDI), enabling customers to combine their CRM and Fusion data within the lakehouse architecture, suggests Oracle may be moving further in the direction of delivering its PaaS value outside its own apps base, which would create more market opportunity for Oracle.

The days of Oracle’s ‘red-stack’ tactics are starting to fade

Getting data into a unified architecture is only half the battle; a big gap at the governance layer for managing different data assets in Iceberg format remains. Addressing this piece, Oracle is launching its own data catalog as part of Autonomous Data Lakehouse, which, importantly, can work with the three core operational catalogs on the market: AWS Glue, Databricks Unity Catalog and Snowflake Polaris (open version).

Customers will be able to access Iceberg tables in these catalogs and query that data within Oracle. While for some customers a single catalog with a unified API may be ideal, in most scenarios, running multiple engines over the same data is the motivator. Oracle’s recognition of this is a strong testament to where the market is headed in open standards and in making it easier to federate data between platforms.

AI Data Platform should provide a lot of simplicity for customers

The Autonomous Data Lakehouse ultimately serves as the foundation of one of Oracle’s other big announcements: AI Data Platform. At its core, AI Data Platform brings together the data foundations — in this case, Autonomous Data Lakehouse integrated with the database — and the app development layer with various out-of-the-box AI models, analytics tools and machine learning frameworks.

Acting as a new OCI PaaS service, AI Data Platform is more a culmination of existing OCI services, though it still marks a big effort by Oracle to bring the AI and data layers closer together, helping create a single point of entry for customers to build AI on unified data. To be clear, this approach is not new, and vendors have long recognized the importance of unifying data and app development layers. Microsoft helped lead the charge with the 2023 launch of Fabric, which is now offering natively embedded SQL and NoSQL databases, followed by Amazon Web Services’ (AWS) 2024 launch of SageMaker AI.

Both offerings leverage the lakehouse architecture and offer integrated access to model tuning and serving tools in addition to the AI models themselves. Of course, in instances like these, Oracle’s differentiation will always rest on the database and the ability for customers to more easily connect to their already contextualized enterprise data in the database for LLMs.

As Oracle becomes more akin to a true hyperscaler, both partners and Oracle must adapt

With AI, platforms are playing a much more prominent role. Customers no longer want to jump through multiple services to complete a given data task. They also want a consistent foundation that can keep pace with rapid technological change. Six of Oracle’s core SI partners are collectively investing $1.5 billion in training over 8,000 practitioners in AI Data Platform, suggesting both Oracle and the ecosystem recognize this shift in customer expectations. It also speaks to the pivot Oracle’s partners may be trying to make. As Oracle strengthens its play in IaaS/PaaS, services partners, which still get the bulk of their Oracle business from SaaS engagements, may need to adjust.

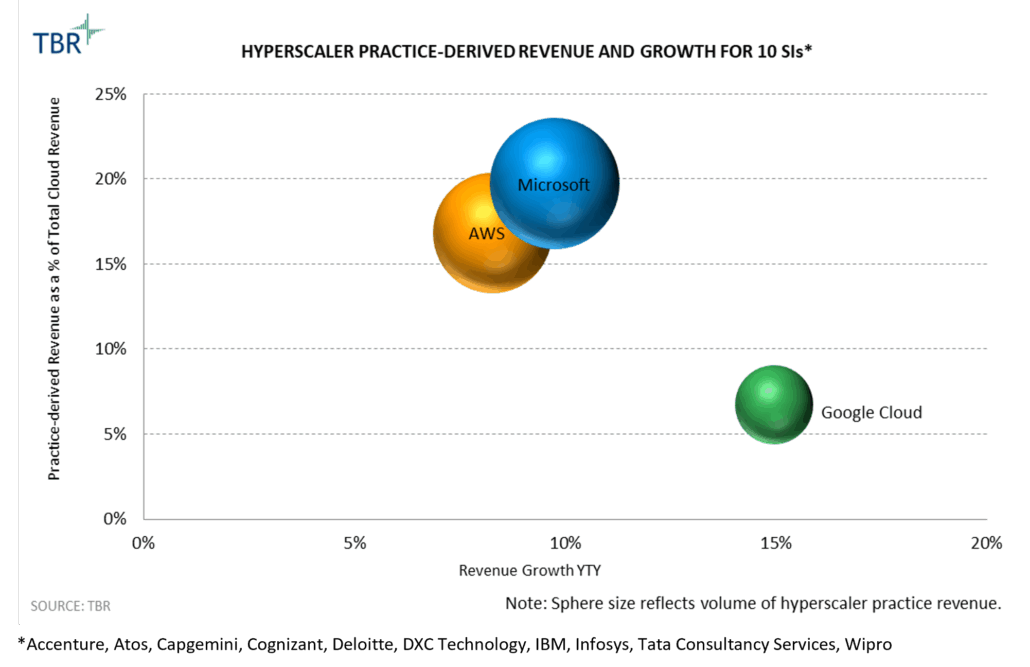

The challenge is that the SIs have already invested so much in AWS, Microsoft and Google Cloud, so viewing Oracle through the hyperscaler lens may be easier said than done. For context, research from TBR’s Cloud Ecosystem Report shows that 10 SIs collectively generated over $45 billion in revenue from their AWS, Azure and Google Cloud Platform (GCP) practices in 2024. Put simply, it may take some effort on Oracle’s part to get SIs to think about Oracle on, say, AWS before AWS on AWS. This effort equates to investments in knowledge management and incentives, coupled with an overall willingness to partner in new ways.

The good news is AI Data Platform, which is available across the hyperscalers, will unlock integration, configuration and customization opportunities, resulting in an immediate win for Oracle in the form of more AI workloads, and eventual sticking points for the GSIs. In the long term, AI Data Platform will serve as a test case for partners’ ability to execute on a previously underutilized portion of the Oracle cloud stack and Oracle’s willingness to help them do so.

Role of SaaS apps pivots around industry outcomes

OCI, including PaaS services like AI Data Platform, is becoming a more prominent part of Oracle’s business. Next quarter (FY2Q26) will mark the inflection point in the Oracle Cloud business when IaaS overtakes SaaS in revenue. But for perspective, a lot of the IaaS momentum is coming from cloud-native and AI infrastructure customers leveraging Oracle for cost-effective compute. Oracle has over 700 AI customers in infrastructure alone, with related annual contract revenue growing in the triple digits year-to-year. Within the enterprise, however, the operational data residing in Oracle’s applications remains integral to the company’s strategy and differentiation.

At Oracle AI World, a lot of the focus was on the progress Oracle has made in delivering out-of-the-box agents not just across the Fusion suite but also in industry applications. Oracle reported it has 600 agents and assistants across the entire apps portfolio, and while the majority are within Fusion, more agents are coming online in the industry suite. These agents will continue to be free of charge, including for the 2,400 customers already taking advantage of AI in Oracle’s applications. While Oracle has long offered a suite of industry apps that are strategically key in helping it appeal to LOB decision makers, Industry Apps will start taking a more central role in Oracle’s strategy, coinciding with the recent appointment of Mike Sicilia, previous head of Industry Apps, to co-CEO.

At the event, it became clear that Oracle is starting to view its applications less as Fusion versus Industry and more as a unified SaaS layer. As customers remain under pressure to deliver outcomes from their generative AI (GenAI) investments, industry alignment will be key, especially as they increasingly find value in using this industry data to tune their own models. As such, TBR can see scenarios in which Oracle increasingly leads with its industry apps, potentially unlocking client conversations in the core Fusion back-office.

With all the talk about catering to outcomes with its industry apps, it will be interesting to see how far Oracle goes to align its pricing model accordingly. It may seem bold, but two decades ago, Salesforce disrupted legacy players, including Oracle, with the SaaS model. Eventually, a vendor will take the risk and align its pricing with the outcomes it claims its applications can deliver.

Final thoughts

The theme of this year’s Oracle AI World was “AI changes everything,” and Oracle is investing at every layer of the stack to address this opportunity. Key considerations at each component include:

- IaaS: It would be very hard to dispute Oracle’s success reentering the IaaS market with the Gen2 architecture. Large-scale AI contracts will fuel OCI growth, making the IaaS business more than 10x what it is today in four years. With this growth, Oracle will give hyperscalers that have been in this business far longer a run for their money. We know OCI will be a big contender for net-new AI workloads. What will be more telling is if OCI can continue to gain share with large enterprises, which are heavily invested with other providers.

- PaaS: Oracle’s steps to simplify the PaaS layer with AI Data Platform, underpinned by Autonomous Data Lakehouse, will help elevate the role of the database within the broader Oracle stack. OLAP specialists will try to disrupt the core database market, and SaaS vendors, even those lacking the storage layer, will position themselves as data companies. Oracle’s ability to deliver a unified platform underpinned by the database to help customers build on their private data in a highly integrated way makes it well positioned to address the impending wave of AI reasoning.

- SaaS: Today, cost-aware customers are less interested in reinventing processes that are working; they are investing in the data layer. In the next few years, the SaaS landscape will begin to look very different as a result of agentic AI. With these factors in mind, our estimates suggest the PaaS market will overtake SaaS, albeit marginally, in 2029. In Fusion, Oracle has undergone a big evolution from embedded agents to custom development to an agentic marketplace, but the features themselves are ultimately table stakes. A lot of SaaS vendors have tried and failed to do industry suites well. Oracle’s industry portfolio, though still playing the role of application, represents an opportunity for Oracle to go to market on outcomes and make AI more applicable within the enterprise.

Of course, what this AI opportunity really looks like and when it will fully materialize is up for debate. The amount of AI revenue companies are generating compared to what they are investing is still incredibly small, though, to be fair, Oracle’s ratio will be far more favorable than those of its peers.

AI model customers that are operating at heavy losses but making big commitments to Oracle pose an added risk. But Oracle AI World only cemented that Oracle believes the risk of underinvesting far outweighs the risk of overinvesting. If the market adapts and customers show their willingness to put their own private data to work, then Oracle’s full-stack approach will ensure its competitiveness.