Pricing research is not always about price

I recently read an article summarizing an onstage interview with Amazon CEO Jeff Bezos at the George W. Bush Presidential Center. During the interview, Bezos described Amazon's data mindset:

“We have tons of metrics. When you are shipping billions of packages a year, you need good data and metrics: Are you delivering on time? Delivering on time to every city? To apartment complexes? … [Data around] whether the packages have too much air in them, wasteful packaging … The thing I have noticed is when the anecdotes and the data disagree, the anecdotes are usually right. There’s something wrong with the way you are measuring it.”

This is a critical insight for market research practitioners, including those (like me) focused on pricing. As analysts, we tend to dive deep into the facts and seek hard evidence. We rely on the data to tell the story and articulate the outcomes. Bezos isn't saying that we should discount data entirely. He's saying that data has value when it is re-examined against the actual customer experience.

Pricing is an inherently data-driven exercise. IT and telecom vendors lean on transactional systems, price lists, research firm pricing databases, and other data-centric tools to make pricing decisions and determine appropriate price thresholds. Most of the pricing projects that we do on behalf of our clients start with the question, “Are we competitively priced versus our peers?” That is usually the most basic component of the results that we deliver.

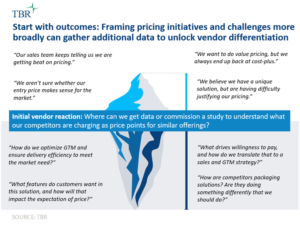

What we've found over years of doing this work is that pricing in the ICT space is often more art than science, and that customer anecdotes about pricing can be as valuable and instructive to pricing strategy as the market pricing datasets we produce. Our approach to pricing research is rooted in interviews with representatives of both the vendor and enterprise customer communities. In conducting these interviews, we often find that pricing problems thought to stem from the price itself are actually broader issues: value articulation, market segmentation, packaging or delivery efficiency. These factors shape the customer experience, create pain points, and ultimately determine willingness to pay and value capture.

When we deliver these results to our pricing research clients, the outcome is often not just a change to list or street prices but a rethinking of broader pricing, go-to-market or customer engagement strategy. Clients use customer anecdotes to rethink how they message a product in their marketing campaigns and content, to devise a new approach to customer segmentation, or to take a hard look at the delivery cost structure and resource pyramid levels that drive their price position. In designing pricing research initiatives, we encourage our clients to think more broadly about pricing and to bring multiple organizational stakeholders into the process, as this can uncover previously unforeseen drivers of price position.

How does this compare to your organization's approach to pricing? How important are customer anecdotes to your pricing processes? Drop a comment here.