AMD Lays Out Its Road Map to Erode NVIDIA’s Dominance in the AI Data Center

All eyes were again trained on San Jose, Calif., during AMD Advancing AI 2025, held on June 12, just three months after NVIDIA GTC 2025. The event centered on AMD’s bold AI strategy, which, in contrast to that of its top competitor, emphasizes an open ecosystem approach to appeal to developers and organizations alike. Much of the industry is seeking increased competition and accelerated innovation in AI, and AMD plans to fill that gap in the market.

Catching up with NVIDIA — can AMD achieve the seemingly impossible?

AMD’s Advancing AI 2025 event presented an opportunity for CEO Lisa Su to outline how AMD’s investments, both organic and inorganic, position the company to challenge NVIDIA’s dominant position in the market. During the event’s keynote address, Su announced new Instinct GPUs, the company’s first rack scale solution, and the debut of ROCm 7.0, the next generation of the company’s open-source AI software platform. She also detailed the company’s hardware road map and highlighted strategic partnerships that underscore the increasing viability of the company’s AI technology.

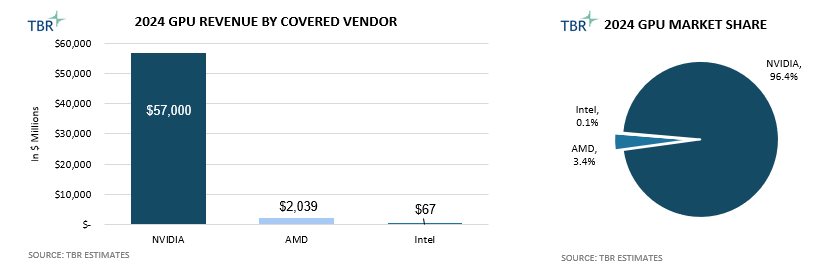

However, NVIDIA’s dominance in the market cannot be overstated, and the AI incumbent’s first-mover advantage has created massive barriers to entry that AMD will need to invest strategically to overcome. For instance, TBR estimates NVIDIA derived more than 25 times the revenue AMD did from the sale of data center GPUs in 2024. Nonetheless, AMD is committed to the endeavor, and the company’s overall AI strategy is clear: deliver competitive hardware and leverage ecosystem openness and cost competitiveness to drive platform differentiation and gain share in the market.

Acquired assets pave the way for Helios

Su’s keynote address began with the launch of AMD’s Instinct MI350 Series GPUs, comprising the Instinct MI350X and MI355X. The Instinct MI355X outperforms the MI350X but requires liquid cooling, whereas the MI350X can be air cooled. As such, the Instinct MI355X offers maximum inferencing throughput and is designed for integration into high-density racks, while the MI350X targets mixed training and inference workloads and suits standard rack configurations. Both GPUs pack 288GB of HBM3e memory, significantly more than the 192GB offered by NVIDIA’s B200 GPUs.

The denser memory architecture of the AMD Instinct MI350 Series is a key enabler of the chips’ competitiveness with NVIDIA’s B200. AMD claims performance ranging from roughly equivalent to approximately twice that of Blackwell, depending on the floating-point precision of the model being run. However, even more noteworthy was AMD’s introduction of its open rack scale AI infrastructure, which was made possible by the company’s 2022 acquisition of Pensando Systems.

Along with the acquired company’s software stack, Pensando added a high-performance data processing unit (DPU) to AMD’s portfolio. By leveraging this technology and integrating Pensando’s team into the company, AMD unveiled the industry’s first Ultra Ethernet Consortium (UEC)-compliant network interface card (NIC) for AI, dubbed AMD Pensando Pollara 400 AI NIC, in 4Q24, highlighting the company’s support of open standards.

At Advancing AI 2025, Su formally announced the integration of the Pollara 400 AI NIC with the company’s MI350 Series GPUs and fifth-generation EPYC CPUs to create the company’s first AI rack solution architecture. It is configurable as an air-cooled variant featuring 64 MI350X GPUs or a liquid-cooled variant featuring up to 128 MI355X GPUs. AMD’s rack scale solution architecture comes in response to NVIDIA’s GB200 NVL72 rack scale solution, and both racks are Open Compute Project (OCP)-compliant to ensure interoperability and simplified integration with existing OCP-compliant infrastructure.

Going a step further, at the event Su introduced AMD’s next-generation GPU, the Instinct MI400 Series, alongside the company’s next-generation rack scale solution. Both are expected to be made available in 2026. The Instinct MI400 Series is slated to deliver roughly twice the peak performance of the MI355X. Helios, AMD’s next-generation rack scale solution, will leverage 72 MI400 Series GPUs in combination with next-generation EPYC Venice CPUs and Pensando Vulcano network adapters. Unsurprisingly, Helios will adhere to OCP standards and support both Ultra Accelerator Link (UALink) and UEC standards for GPU-to-GPU interconnection and rack-to-rack connectivity.

AMD compared Helios to the prerelease specs of NVIDIA’s upcoming Vera Rubin NVL72 solution, which is also scheduled for release in 2026. AMD expects Helios to deliver the same scale-up bandwidth and similar FP4 and FP8 performance, along with 50% greater HBM4 memory capacity, memory bandwidth and scale-out bandwidth. However, if AMD GPUs deliver higher memory capacity and bandwidth than equivalent NVIDIA GPUs, the question becomes: Why do NVIDIA GPUs dominate the market?

Developers, developers, developers

At NVIDIA GTC 2025, CEO Jensen Huang said, “Software is the most important feature of NVIDIA GPUs,” and this statement could not be more true. While NVIDIA has benefited from first-mover advantage in the GPU space, currently the company’s GPU release cycle is only slightly ahead of AMD’s in terms of delivering roughly equivalent silicon to market from a compute performance perspective. However, AMD has a leg up when it comes to GPU memory capacity, which helps to drive inference efficiency.

Where NVIDIA’s first-mover advantage really benefits the company is on the software side of the accelerated computing equation. In 2006 NVIDIA introduced CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model purpose-built to accelerate workloads beyond graphics on the GPU. Since then, CUDA has amassed a developer base nearing 6 million and now boasts more than 300 libraries and 600 AI models, with over 48 million downloads. Importantly, CUDA is proprietary, designed and optimized to exclusively support NVIDIA GPUs, resulting in strong vendor lock-in.

Conversely, AMD’s ROCm is open source and relies heavily on community contributions to drive the development of applications. ROCm recognizes the inertia behind CUDA and the legacy applications built and optimized on the platform. To address this, it leverages HIP (Heterogeneous-computing Interface for Portability) to allow for the porting of CUDA-based code, simplifying code migration. However, certain CUDA-based applications, especially more complex ones, do not run with the same performance on AMD GPUs after being ported, because some NVIDIA software optimizations have not yet been replicated.

Recognizing the critical importance of the ecosystem to the company’s broader success, AMD continues to invest in enhancing its ROCm platform to appeal to more developers. At Advancing AI 2025, the company introduced ROCm 7, which promises stronger inference throughput and training performance than ROCm 6. AMD also announced that ROCm 7 supports distributed inference, including disaggregated serving, which decouples the prefill and decode phases of inferencing. Running these phases on separately provisioned resources can significantly reduce the cost of token generation, especially for AI reasoning models. Minimizing the cost of token generation is key to maximizing customers’ revenue opportunity, especially for customers running high-volume workloads, such as service providers.

In addition to distributed inference capabilities similar to those offered by NVIDIA Dynamo, AMD announced ROCm Enterprise AI, a machine learning operations (MLOps) and cluster management platform designed to support enterprise adoption of Instinct GPUs. ROCm Enterprise AI includes tools for model fine-tuning, Kubernetes integration and workflow management. The platform will rely heavily on software partnerships with companies like Red Hat and VMware to support the development of new, use-case- and industry-specific AI applications, and in stark contrast to NVIDIA AI Enterprise, ROCm Enterprise AI will be available free of charge. This pricing strategy is key in driving the development of ROCm applications and the adoption of the platform. However, customers may continue to be willing to pay for the maturity and breadth of NVIDIA AI Enterprise, especially as NVIDIA continues to invest in the expansion of its capabilities.

Partners advocate for the viability of AMD in the AI data center

Key strategic partners, including executives from Meta, Oracle and xAI, joined Su on stage during the event’s keynote, endorsing the company’s AI platforms. All three companies have deployed AMD Instinct GPUs and intend to deploy more over time. These are some of the largest players in the AI space. Their words underscore the value they see in AMD and in the company’s approach of driving a more competitive ecosystem to accelerate AI innovation and reduce single-vendor lock-in.

However, perhaps the most noteworthy endorsement came from OpenAI CEO Sam Altman, who discussed how his company is working alongside AMD to design AMD’s next-generation GPUs, which will ultimately be employed to help support OpenAI’s infrastructure. While on stage, Altman also underscored the growing AI market with arguably the most ambitious, albeit somewhat self-serving, statement of the entire keynote: “Theoretically, at some point, you can see that a significant fraction of the power on Earth should be spent running AI compute.” It is safe to say that AMD would be pleased if this ends up being the case; however, for now, AMD is projecting the data center AI accelerator total addressable market will grow to greater than $500 billion by 2028, with inference representing a strong majority of AI workloads.

AMD has become the clear No. 2 player in the AI data center and is well positioned to take share from NVIDIA

AMD’s Advancing AI 2025 event reaffirmed the company’s open-ecosystem-driven and cost-competitive AI strategy while also highlighting how far its AI hardware portfolio has come over the last few years. However, while AMD’s commitment to an open software ecosystem and open industry standards is a strong differentiator, it is also a major risk. That commitment makes AMD’s success dependent on the performance of partners and consortium members. TBR sees the reputation of AMD GPUs becoming more positive, but NVIDIA’s massive installed base and developer ecosystem make competing with the industry giant a significant challenge.

Technology Business Research, Inc.
