Telecom AI Market Landscape

TBR Spotlight Reports represent an excerpt of TBR’s full subscription research. Full reports and the complete data sets that underpin benchmarks, market forecasts and ecosystem reports are available as part of TBR’s subscription service.

Post updated: Feb. 13, 2026

AI will change the telecom industry, but the transformation will take longer than anticipated; the AI ecosystem is exhibiting bubble behavior, and a reset is likely

A new network architecture is required for AI, as current networks will not suffice

One key aspect of AI workloads, especially those emanating from end-user devices, is that they are uplink-intensive, meaning they rely more heavily on uplink resources from the network than on downlink resources. This is a fundamental issue because macro, cellular-based networks are optimized for downlink capacity (typically a 10:1 downlink-uplink ratio from a resource-allocation perspective). To optimize networks for uplink, CSPs will need to make significant investments in new network technologies and rethink how spectrum resources are utilized.
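To make the downlink-uplink asymmetry concrete, the following is a minimal arithmetic sketch, assuming resources are split in a simple D:1 proportion. The function name `uplink_share` and the 4:1 and 1:1 comparison ratios are hypothetical illustrations, not figures from TBR; only the 10:1 ratio comes from the text above.

```python
def uplink_share(downlink_to_uplink_ratio: float) -> float:
    """Fraction of total link resources left for uplink under a D:1 split."""
    if downlink_to_uplink_ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 / (downlink_to_uplink_ratio + 1.0)


# 10:1 is the typical allocation cited above; 4:1 and 1:1 are
# hypothetical rebalancing targets included only for comparison.
for ratio in (10, 4, 1):
    print(f"{ratio}:1 downlink-uplink -> uplink share {uplink_share(ratio):.1%}")
```

Under this simplified model, a 10:1 split leaves roughly 9% of resources for uplink, which illustrates why uplink-intensive AI workloads strain networks that were dimensioned for downlink-heavy consumption.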

AI traffic also tends to require lower latency than current networks can support and produces higher bursts of traffic than video and other media consumption. AI networks require more uplink bandwidth, lower latency and the ability to handle higher bursts in traffic patterns at scale, and none of these requirements can be met just by increasing capacity. These requirements are the opposite of how networks are architected today (optimized for downlink, best-effort or good-enough latency, and relatively predictable traffic patterns), necessitating significant investment by CSPs. This will be a gradual transition, as there is no silver bullet to address this problem quickly. The best approach appears to be decoupling the downlink from the uplink to address transmit power asymmetries, enabling network resources to dynamically adapt to traffic demands in real time. It also remains unclear how willing CSPs will be to invest in uplink capacity while ROI is uncertain.

Hyperscalers’ network needs for AI drive opportunities for CSPs

Hyperscalers’ rapidly expanding AI workloads are reshaping their network requirements, creating opportunities for CSPs. Training and running large-scale AI models demand massive, low-latency, high-capacity connectivity between data centers, cloud regions and edge locations — capabilities that align closely with CSPs’ core strengths in fiber, long-haul transport, metro networks and subsea infrastructure. As hyperscalers prioritize speed of deployment and geographic reach, CSPs can monetize dark fiber, wavelength services, private optical networks and data center interconnection, positioning their networks as enablers of AI scale rather than commoditized connectivity.

AI workloads behave differently from traditional network traffic, necessitating changes in networks and their architecture. Some CSPs, such as Lumen and Zayo, have become proxies for hyperscalers, addressing some of the hyperscalers’ network needs by selling dedicated wholesale capacity (usually transport solutions for data center interconnect [DCI] and metro backhaul). The inferencing opportunity will emerge, likely over the next few years, but clearer ROI will need to be demonstrated to justify the investment necessary to scale.

CSPs have an opportunity to capture meaningful value from AI, but realizing this opportunity requires expeditious action and investment

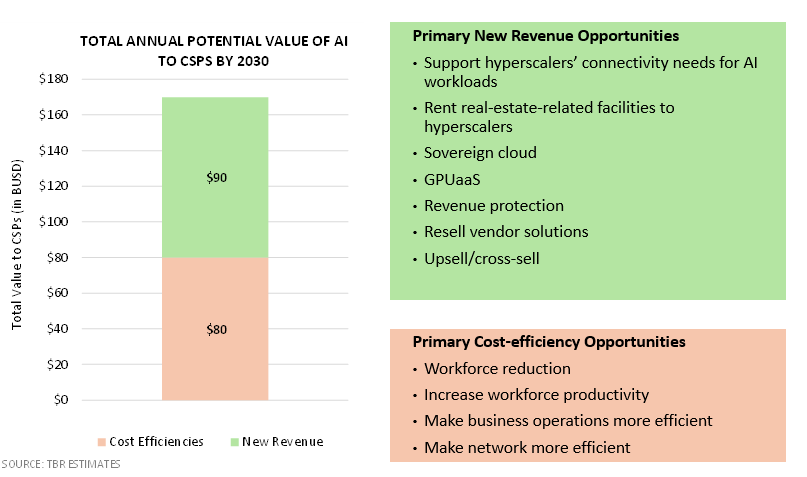

Realizing the $170 billion total annualized opportunity that TBR estimates AI will present to telecom operators by 2030 requires CSPs to act differently

- Address change management (e.g., workforce training and development; AI impact and implications on day-to-day operations, business processes and customer outcomes)

- Build a corporatewide governance framework for how to handle AI internally and with partners and customers

- Build a corporatewide data strategy with a long-term road map toward a single, unified platform

- Empower BUs to implement AI in situations where there is a clear ROI

- Make additional budget available whenever clear, ROI-positive opportunities emerge

Hyperscalers are becoming the de facto AI backbone for telecom, reshaping CSP AI strategies and investment priorities

Most hyperscalers are vertically integrated across the AI stack, spanning multiple domains including custom semiconductors (e.g., AWS Trainium and Inferentia; Google Tensor Processing Unit [TPU]), ICT infrastructure (hyperscale data centers and backbone networks), cloud platforms for AI workload hosting, AI-enabled devices (e.g., smartphones and endpoints), foundational AI models (e.g., large language models), and AI software platforms and applications (e.g., Microsoft Copilot, contact center solutions).

Hyperscalers either own, control or hold significant strategic stakes in leading foundational model developers (e.g., OpenAI, Anthropic) while also developing proprietary AI models in-house. In parallel, they design custom silicon optimized for AI training and inference, operate the underlying infrastructure required to run AI workloads at scale, and package AI capabilities into products that can be embedded into enterprise and industry-specific solutions. As a result, hyperscalers are likely to remain the de facto providers of foundational AI models, platforms and tooling that CSPs will leverage for telecom-specific use cases such as network operations, customer care and customer journey orchestration.

The current AI investment cycle, catalyzed by the launch of OpenAI’s ChatGPT in 4Q22, prompted hyperscalers to reassess capital allocation priorities. This has driven a renewed emphasis on centralized hyperscale data centers, which are best suited to the extreme compute density, power availability and cooling requirements of large-scale AI model training. At the same time, investment momentum in edge cloud infrastructure has slowed temporarily, as near-term AI economics favor centralized training and inference.

Nearly all CSPs are expected to rely on hyperscalers in some capacity to enable AI across both network and business operations, whether through public cloud services, AI platforms, foundation models or ecosystem partnerships.

Amazon, Alphabet and Microsoft are pursuing dual strategies: enabling CSPs’ internal digital transformation and AI adoption while also partnering with CSPs to distribute AI-enabled solutions to enterprise and SMB customers.

China’s hyperscalers — notably Alibaba, Baidu and Tencent — are advancing AI strategies broadly similar to those of U.S.-based hyperscalers, including investments in models, platforms and infrastructure. However, large China-based CSPs are simultaneously investing heavily in proprietary AI capabilities, reducing their reliance on hyperscalers relative to peers in other regions. This contrasts with most global markets, where U.S.-based hyperscalers function as the primary providers of AI technologies, both directly and through partner ecosystems.

Technology Business Research, Inc.
