AI & GenAI Model Provider Market Landscape

TBR Spotlight Reports represent an excerpt of TBR’s full subscription research. Full reports and the complete data sets that underpin benchmarks, market forecasts and ecosystem reports are available as part of TBR’s subscription service.

Interest in AI capabilities has not waned as enterprises view the technology as critical to long-term competitive positioning

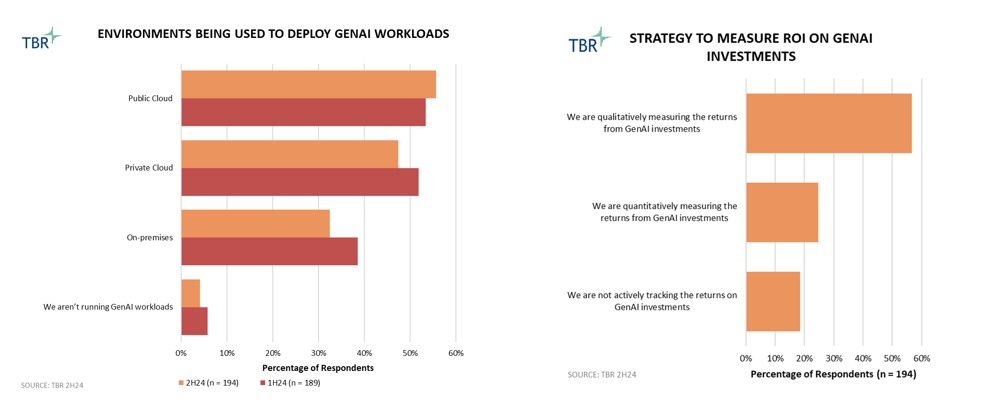

The buzz around GenAI persists as enterprise interest translates into adoption. Yet it is still early days, and many enterprises remain in exploration mode. Some use cases, such as data management, customer service, administrative tasks and software development, have already moved from the proof-of-concept stage to production. Still, the exploration phase of AI adoption will be a slow burn as enterprises seek opportunities beyond this low-hanging fruit. As seen in the graph above, most enterprises are evaluating AI qualitatively, forgoing quantitative measures in order to keep up with peers, on the assumption that the technology will bring transformational improvement to business operations.

Reasoning models excel at performing complex, deterministic tasks, and have become the most popular models at the back end of agentic AI

The capability improvement brought by the iterative inferencing process has made reasoning models the focal point of frontier model research. In fact, most of the models sitting atop established third-party benchmarks are reasoning models, except for OpenAI’s GPT-4.5, which the company stated would be its last nonreasoning LLM. Put simply, the difference in output quality is too pronounced to ignore, especially regarding complex, deterministic tasks. As seen in the graph, reasoning models outperform their nonreasoning predecessors across the board, with the greatest distinction appearing in coding and math benchmarks. The strength in complex, deterministic tasks makes reasoning models particularly adept at powering agentic AI capabilities, offering a wider range of addressable use cases and greater accuracy. In addition, reasoning frameworks can be leveraged at any parameter count, with available reasoning models ranging from fewer than 10 billion parameters to more than 100 billion.

As SaaS vendors continue to build proprietary, domain-specific SLMs [small language models] to power their agentic capabilities, incorporating reasoning frameworks will be an important part of their development strategies. Although the capabilities of reasoning models are impressive, the models bring new challenges and are not necessarily the best choice for every application.

Simple content generation and summarization, for instance, do not necessarily require iterative inferencing. Moreover, the greater compute intensity caused by repeated processing at the transformer layer will compound existing challenges to scaling AI adoption. Not only will these models be more expensive for customers to run, but they will also exacerbate the persistent supply shortages facing cloud infrastructure providers. Microsoft has cited infrastructure constraints as a headwind to AI revenue growth in the past several quarters, and the emerging need for test-time compute adds to these infrastructure demands. As discussed in TBR’s special report, Sheer Scale of GTC 2025 Reaffirms NVIDIA’s Position at the Epicenter of the AI Revolution, NVIDIA CEO Jensen Huang stated that reasoning AI consumes 100 times more compute than nonreasoning AI. Of course, this was a highly self-serving statement, as NVIDIA is the leading provider of the GPUs powering this compute, but the difference is nonetheless one of orders of magnitude. For the use of reasoning models to continue scaling, this high compute intensity will need to be addressed.
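To make the scaling concern concrete, the following minimal sketch shows how a compute multiplier would flow through to inference spend if cost scaled linearly with compute. The function name, the baseline cost per query and the query volume are hypothetical placeholders chosen for illustration, not TBR data or vendor pricing; only the 100x multiplier is taken from the statement attributed to Huang.

```python
def monthly_inference_cost(queries_per_month: int,
                           base_cost_per_query: float,
                           compute_multiplier: float = 1.0) -> float:
    """Estimate monthly spend, ASSUMING cost scales linearly with compute.

    All inputs are illustrative; real pricing depends on model, token
    counts, hardware and vendor terms.
    """
    if queries_per_month < 0 or base_cost_per_query < 0 or compute_multiplier <= 0:
        raise ValueError("inputs must be non-negative and the multiplier positive")
    return queries_per_month * base_cost_per_query * compute_multiplier


# Hypothetical workload: not drawn from the report.
QUERIES = 1_000_000   # queries per month (assumed)
BASE_COST = 0.002     # dollars per nonreasoning query (assumed)

baseline = monthly_inference_cost(QUERIES, BASE_COST)
# 100x is the figure attributed to NVIDIA's CEO, treated here as an upper bound.
reasoning = monthly_inference_cost(QUERIES, BASE_COST, compute_multiplier=100)

print(f"nonreasoning: ${baseline:,.2f}")
print(f"reasoning:    ${reasoning:,.2f}")
print(f"ratio:        {reasoning / baseline:.0f}x")
```

Under the linear-cost assumption, whatever multiplier applies to compute applies equally to spend, which is why routing simple generation and summarization tasks to nonreasoning models is a plausible way to contain cost.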

SaaS vendors will need to get on board with the new Model Context Protocol to ensure customers can use their model of choice

SaaS vendor strategy assessment

From a strategic positioning perspective, TBR does not expect the rising popularity of the Model Context Protocol to have an outsized impact, primarily because we anticipate all application vendors will adopt the framework to ensure customers can leverage the model of their choice. Furthermore, cloud application vendors are positioned to benefit from the standardization of API calls between models and their workloads. Through a standardized API calling framework, these vendors will be better positioned to drive cost optimization and improve workload management for embedded AI tools.

Recent developments

The Model Context Protocol is becoming the standard: The Model Context Protocol (MCP) has been steadily gaining popularity since its release by Anthropic in November 2024. At its core, MCP aims to address the emerging challenge of building dedicated API connectors between LLMs and applications by introducing an abstraction layer that standardizes API integrations. This abstraction layer, commonly referred to as the MCP server, would establish a default method for LLM function calling, which software providers would need to incorporate into their applications to access LLMs.

This standardization offers several benefits for model vendors, such as eliminating the need to build individual connectors for each service and promoting a modular approach to AI service integration, potentially unlocking long-term advantages in areas such as workload management and cost optimization.
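The connector arithmetic behind this benefit can be sketched as follows. With dedicated connectors, every model-application pair needs its own integration; with a shared protocol, each side implements the standard once. The class, function and tool names below are illustrative assumptions only; they are not part of the MCP specification or any vendor SDK, and the sketch does not reproduce MCP's actual wire format.

```python
from typing import Any, Callable, Dict, List


def point_to_point_connectors(models: int, apps: int) -> int:
    """Dedicated connectors: one per (model, application) pair."""
    return models * apps


def standardized_connectors(models: int, apps: int) -> int:
    """Shared protocol: each model and each application integrates once."""
    return models + apps


class ToyToolServer:
    """Hypothetical stand-in for the abstraction layer.

    Any model-side client can discover and invoke application functions
    through the same two methods, regardless of which application
    registered them.
    """

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, handler: Callable[..., Any]) -> None:
        self._tools[name] = handler

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, arguments: Dict[str, Any]) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**arguments)


# Illustrative application function exposed through the toy server.
server = ToyToolServer()
server.register(
    "lookup_ticket",
    lambda ticket_id: {"ticket_id": ticket_id, "status": "open"},
)

result = server.call("lookup_ticket", {"ticket_id": "T-1"})
print(server.list_tools(), result)
print(point_to_point_connectors(5, 20), standardized_connectors(5, 20))
```

For an assumed landscape of 5 models and 20 applications, the pairwise approach implies 100 integrations versus 25 under a shared standard, which illustrates why the modular approach can pay off as the number of services grows.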

For SaaS vendors, there is little reason to resist the shift toward MCP, and its growing popularity may make adoption inevitable. Application vendors like Microsoft and ServiceNow have already begun implementing the protocol by establishing MCP servers for the Copilot suite and Now Assist, respectively, and TBR expects other vendors to follow.

It is important to recognize, however, that this approach better suits vendors taking a model-agnostic stance, meaning they aim to empower enterprises to use any LLM to automate agentic capabilities. Vendors that are less model-agnostic are a possible exception. For instance, Salesforce’s emphasis on proprietary models reduces the need for MCP and favors the company’s focus on native connectors between Customer 360 workflows and xGen models.

Ultimately, TBR expects Salesforce to adopt MCP, but there is an important distinction in how different SaaS vendors may approach standardization. Today, the BYOM [bring your own model] philosophy remains a priority for Salesforce, but if the company were to eventually push customers to use its proprietary models exclusively with Customer 360, its commitment to MCP could be deprioritized in favor of tighter customer lock-in.

Google enhances AI capabilities with the launch of Gemini 2.5 Pro, revolutionizing search functionality, healthcare solutions and multimodal content generation

Google remains differentiated in the AI landscape through the deep integration of its proprietary models across a broad product ecosystem, including Search, YouTube, Android and Workspace. Although many competitors focus on niche capabilities or open-source development, Google positions Gemini as a comprehensive, multimodal foundation model designed for wide-scale consumer and enterprise adoption. Google’s infrastructure, proprietary TPUs (Tensor Processing Units), and access to vast and diverse data sources provide a significant advantage in training and deploying next-generation models. Gemini 2.5 Pro is a testament to this strength, offering the best performance and largest context window available on the market. Although TBR expects the top spot to continue changing hands, we believe Google’s models will remain among the frontier leaders for years to come.

OpenAI advances AI development with GPT-4.5, cutting-edge agent tools and a premium ChatGPT Pro subscription to expand capabilities and improve user experiences

OpenAI is the most valuable model developer in the market today, largely due to the company’s success in productizing its models via ChatGPT. The mindshare generated by ChatGPT is benefiting the company’s ability to reach custom enterprise workloads, though OpenAI must be mindful of the widening gap in price to performance relative to peers. From a sheer performance perspective, TBR believes the company’s emphasis on securing compute infrastructure via the Stargate Project, as well as its ongoing partner initiatives to gain access to high-quality training data, will ensure its models remain near the top of established third-party benchmarks over the long term.

Technology Business Research, Inc.
