AI PC and AI Server Market Landscape

TBR Spotlight Reports represent an excerpt of TBR’s full subscription research. Full reports and the complete data sets that underpin benchmarks, market forecasts and ecosystem reports are available as part of TBR’s subscription service.

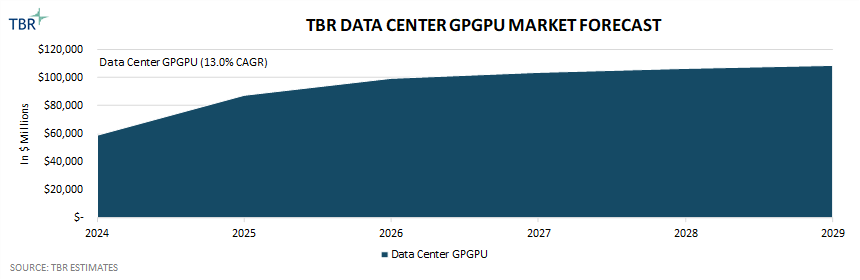

Despite hyperscalers’ increasing investments in custom AI ASICs, TBR expects demand for GPGPUs to remain robust over the next five years, driven largely by the ongoing success of NVIDIA DGX Cloud

The world’s largest CSPs, including Amazon, Google and Microsoft, remain some of NVIDIA’s biggest customers, using the company’s general-purpose graphics processing units (GPGPUs) to support internal workloads while also hosting NVIDIA’s DGX Cloud service on DGX systems residing in the companies’ own data centers.

However, while Amazon, Google and Microsoft have historically employed some of the most active groups of CUDA developers globally, all three companies have been actively investing in the development and deployment of their own custom AI accelerators to reduce their reliance on NVIDIA. Additionally, Meta has invested in the development of custom AI accelerators to help train its Llama family of models, and Apple has developed servers based on its M-Series chips to power Apple Intelligence’s cloud capabilities.

Still, even as fabless semiconductor companies such as Broadcom and Marvell increasingly invest in offering custom AI silicon design services, only the largest companies in the world have the capital to make these kinds of investments. Further, only a subset of these large technology companies operate at the scale needed to yield measurable returns on investment and total cost of ownership savings. As such, even as investment in the development of custom AI ASICs rises rapidly, the vast majority of customers continue to choose NVIDIA’s GPGPUs, not only for their programming flexibility but also for the rich developer resources and robust prebuilt applications that make up the hardware-adjacent side of NVIDIA’s comprehensive AI stack.

Companies across a variety of industry verticals want to take a piece of NVIDIA’s AI cake

Scenario Discussion: NVIDIA faces increasing threats from both industry peers and partners

NVIDIA GPGPUs are the accelerator of choice in today’s AI servers. However, the dominance of the AI server and GPGPU market incumbent is increasingly under threat from both internal and external factors, which are closely related. Internally, as Wall Street’s darling and a driving force behind the Nasdaq’s nearly 29% annual return in 2024, NVIDIA faces growing investor scrutiny of its business decisions and quarterly results, forcing the company to weigh its moves carefully to maximize profitability and shareholder returns. Externally, while NVIDIA positions itself largely as a partner-centric AI ecosystem enabler, the number of the company’s competitors and frenemies is on the rise.

Despite NVIDIA’s sequentially eroding operating profitability, investor scrutiny has not had a clear impact on the company’s opex investments, as evidenced by a 48.9% year-to-year increase in R&D spend during 2024. However, that scrutiny may well be a contributing factor to the company’s aggressive pricing tactics and rising coopetition with certain partners. While pricing power is one of the luxuries of having a first-mover advantage and a near monopoly in the GPGPU market, high margins attract competitors, and high prices drive customers to explore alternatives.

Additionally, customers’ fear of vendor lock-in comes with being the only name in town. While most organizations can do little to counteract it, NVIDIA’s customers include some of the largest, most capital-rich and technologically capable companies in the world, which are in a position to do so.

To reduce their reliance on NVIDIA GPUs, hyperscalers and model builders alike have increasingly invested in the development of their own custom silicon, including AI accelerators, leveraging acquisitions of chip designers and partnerships with custom ASIC developers such as Broadcom and Marvell to support their ambitions. For example, Amazon Web Services (AWS), Azure, Google Cloud Platform (GCP) and Meta have their own custom AI accelerators, and OpenAI is reportedly working with Broadcom to develop an AI ASIC of its own. However, what these custom AI accelerators have in common is their purpose-built design to support company-specific workloads, and in the case of AWS, Azure and GCP, while customers can access custom AI accelerators through the companies’ respective cloud platforms, the chips are not physically sold to external organizations.

In the GPGPU space, AMD and, to a lesser extent, Intel are NVIDIA’s direct competitors. While AMD’s Instinct line of GPGPUs has become increasingly powerful, rivaling the performance of NVIDIA GPGPUs in certain benchmarks, the company has failed to gain share from the market leader, due largely to CUDA’s first-mover advantage. However, the rise of AI has driven growing investment in alternative programming models, such as AMD ROCm and Intel oneAPI (both of which are open source, in contrast to CUDA), and in programming languages like OpenAI Triton. Despite these developments, TBR believes NVIDIA will retain its majority share of the GPGPU market for at least the next decade due to the momentum behind its integrated stack of closed-source software and optimized hardware.

Microsoft Copilot+ PCs represent a brand-new category and opportunity for Windows PC OEMs industrywide

PC OEMs expected the post-pandemic PC refresh cycle to begin in 2023, but over the past 18 months the expected start has repeatedly been pushed out, with current estimates indicating the next major refresh cycle will ramp sometime in 2025. While the expected timing of the refresh cycle has changed, the drivers have remained the same: PC OEMs expect the aging PC installed base, the end of Windows 10 support in October 2025 and the introduction of new AI PCs to coalesce, driving meaningful rebounds in the year-to-year revenue growth of both the commercial and consumer segments of the PC market.

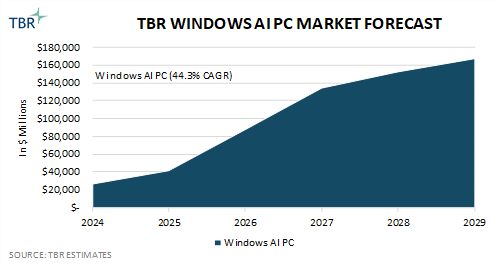

As organizations graduate from Windows 10 devices to Windows 11 devices, TBR expects many customers will opt for AI PCs to future-proof their investments, understanding that the overall commercial PC market will be dominated by devices powered by Windows AI PC SoCs in a few years’ time. However, while TBR expects the Windows AI PC market to grow at a 44.3% CAGR over the next five years, this robust growth rate stems largely from the small revenue base of Windows AI PCs today.
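To make the compounding behind a 44.3% CAGR concrete, the minimal sketch below applies the standard compound growth formula, value after n years = starting value × (1 + rate)^n. Only the 44.3% rate and the five-year horizon come from the text; no base-year revenue figure is assumed, so the output is a growth multiple rather than a dollar value, and the function names are hypothetical.

```python
# Standard compound growth: value_n = value_0 * (1 + rate) ** years.
# Rate (44.3%) and horizon (5 years) are taken from the text; no base-year
# revenue is assumed, so results are growth multiples, not dollar figures.


def cagr_multiple(rate: float, years: int) -> float:
    """Return how many times larger a quantity becomes after compounding
    at `rate` per year for `years` years (hypothetical helper)."""
    return (1.0 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Inverse of cagr_multiple: the constant annual rate that turns
    `start` into `end` over `years` years (hypothetical helper)."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    multiple = cagr_multiple(0.443, 5)
    print(f"5-year multiple at 44.3% CAGR: {multiple:.2f}x")
    # Round trip: recover the annual rate from the five-year multiple
    print(f"Recovered annual rate: {implied_cagr(1.0, multiple, 5):.3f}")
```

At 44.3% a year, a quantity ends up roughly 6.3 times its starting level after five years. Because that multiple applies to today’s small Windows AI PC revenue base, a high growth rate on its own says little about the market’s absolute size.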

While Apple dominated the AI PC market in 2024 due to its earlier transition to its own silicon platform (the M Series, which features onboard NPUs), TBR estimates indicate that among the big three Windows OEMs, HP Inc.’s AI PC share was greatest in 2024, followed closely by Lenovo and then Dell Technologies. Without an infrastructure business, HP Inc. relies heavily on its PC segment to generate revenue, and as such, TBR believes that relative to its peers, and Dell Technologies in particular, HP Inc. is more willing to offer promotions and accept lower margins in exchange for greater sales volume, a key factor in today’s increasingly price-competitive PC market. TBR estimates Lenovo’s second-place position is tied to the company’s growing traction in the China AI PC market, where Microsoft Copilot has no presence and where Lenovo first launched AI PCs leveraging a proprietary AI agent.

The PC ecosystem increases its investments in developer resources to unleash the power of the NPU

Currently available AI PC-specific applications, such as Microsoft Copilot and PC OEMs’ proprietary agents, are focused primarily on improving productivity, which drives more value on the commercial side of the market compared to the consumer side. However, it is likely more AI PC-specific applications will be developed that harness the power of the neural processing unit (NPU), especially as AI PC SoCs continue to permeate the market.

Companies across the PC ecosystem, including silicon vendors, OS providers and OEMs, are investing in expanding the number of resources available to developers to support AI application development and ultimately drive the adoption of AI PCs. For example, AMD Ryzen AI Software and Intel OpenVINO are similar bundles of resources that allow developers to create and optimize applications to leverage the companies’ respective PC SoC platforms and heterogeneous computing capabilities, with both tool kits supporting the NPU in addition to the central processing unit (CPU) and GPU.

However, as it relates to AI PCs, TBR believes the NPU will be leveraged primarily for its ability to improve the energy efficiency of certain application processes, rather than for enabling the creation of net-new AI applications. While the performance of PC SoC-integrated GPUs pales in comparison to that of discrete PC GPUs purpose-built for gaming, professional visualization and data science, the TOPS (trillions of operations per second) performance of SoC-integrated GPUs typically far exceeds that of SoC-integrated NPUs, in part because the two processing units are intended to serve different purposes.

The GPU is best suited for the most demanding parallel processing functions requiring the highest levels of precision, while the NPU is best suited for functions that prioritize power efficiency and require lower levels of precision, such as noise suppression and video blurring. As such, TBR sees the primary value of the NPU as extended battery life, an extremely important factor for all mobile devices. This is the key reason TBR believes AI PC SoCs will gradually replace all non-AI PC SoCs, eventually being integrated into nearly all consumer and commercial client devices.

One of the reasons PC OEMs are so excited about the opportunity presented by AI PCs is that these devices command higher prices, supporting OEMs’ longtime focus on premiumization. Commercial customers, especially large enterprises in technology-driven sectors like finance, typically buy more premium machines, while consumers generally opt for less expensive devices; TBR believes this will be another significant reason AI PC adoption rises in the commercial segment of the market before the consumer segment.

Technology Business Research, Inc.
